China's Weaponized Communication in the International Public Opinion War: Scenarios and Risk Responses


【Abstract】 In the international public opinion war, weaponized communication has penetrated into the military, economic, diplomatic and other fields, giving rise to both the notion and the practice that “everything can be weaponized”. Weaponized communication manipulates public perception through technology, platforms and policies, reflecting a complex interplay of power distribution and cultural contestation. Driven by globalization and digitalization, cognitive manipulation, social fragmentation, emotional polarization, digital surveillance and information colonization have become new means of influencing national stability. This not only intensifies competition between information-strong and information-weak countries, but also gives information-weak countries an opportunity to reverse their position through flexible strategies and technological innovation. In a global landscape of asymmetric communication, finding a point of convergence and balance between technological innovation and ethical responsibility, and between strategic goals and social balance, will be a key factor shaping the future international public opinion landscape.

【Keywords】 Public opinion warfare; weaponized communication; information manipulation; asymmetric communication; information security

If “propaganda is a rational recognition of the modern world” [1], then weaponized communication is a rational application of modern technological means. In the “public opinion war”, each participant pursues its strategic goals through different communication methods, in ways that appear reasonable on the surface while remaining concealed. Unlike traditional military conflict, modern warfare involves not only physical confrontation but also competition across several fields, including information, economics, psychology and technology. With the advance of technology and globalization, the shape of war has changed profoundly, and traditional physical confrontation has gradually given way to integrated warfare across multiple dimensions and domains. In this process, weaponized communication has become a modern form of warfare: an invisible means of violence that affects the psychology, emotions and behavior of adversaries or target audiences by controlling, guiding and manipulating public opinion, thereby achieving political, military or strategic ends. Clausewitz's On War holds that war is an act of violence intended to render the enemy unable to resist and to compel him to submit to our will [2]. In modern warfare, achieving this goal relies not only on military confrontation but also on support from non-traditional fields such as information, networks and psychological warfare. The idea of sixth-generation warfare signals a further shift in the shape of war, emphasizing the application of emerging technologies such as artificial intelligence, big data and unmanned systems, as well as comprehensive contests in the information, network, psychological and cognitive domains. The “front line” of modern warfare has expanded to include social media, economic sanctions and cyberattacks, requiring participants to possess stronger capabilities for information control and public opinion guidance.

At present, weaponized communication has penetrated into the military, economic, diplomatic and other fields, bringing with it the apprehension that “everything can be weaponized”. In the sociology of war, communication is seen as an extension of power, and information warfare has become deeply intertwined with traditional warfare. Operating within this framework of information control, weaponized communication consolidates or weakens the power of states, regimes or non-state actors by shaping public perceptions and emotions. This process occurs not only in wartime; in peacetime it also affects power relations within and between states. In international political communication, information manipulation has become a key tool in great-power competition, as countries try to influence global public opinion and international decision-making by spreading disinformation and launching cyberattacks. Public opinion warfare is thus not merely a means of disseminating information; it also bears on power competition and diplomatic relations between countries, directly affecting the governance structure and power configuration of the international community. On this basis, this paper examines the conceptual evolution of weaponized communication, analyzes the social mentality behind it, describes the specific technical means involved and the risks they entail, and proposes multidimensional strategies for responding to them at the national level.

1. From the weaponization of communication to weaponized communication: conceptual evolution and metaphor

Weapons have been symbols and tools of war throughout human history, and war is the most extreme and violent form of conflict in human society. Accordingly, “weaponized” refers to the use of certain tools for confrontation, manipulation or destruction in warfare, emphasizing the way in which these tools are used. “Weaponize” is defined as “to make it possible to use something to attack an individual or group of people”. In 1957, the term “weaponization” was introduced as a military term when Wernher von Braun, leader of the V-2 ballistic missile team, described his main work as “weaponizing the military’s ballistic missile technology” [3].

The term “weaponization” first appeared in the space domain during the U.S.-Soviet arms race, as the two superpowers competed for dominance in outer space. “Weaponization of space” refers to the process of using space for the development, deployment or use of military weapons systems, including satellites, anti-satellite weapons and missile defense systems, for strategic, tactical or defensive operations. From 1959 to 1962, the United States and the Soviet Union put forward a series of initiatives to ban the use of outer space for military purposes, in particular the placement of weapons of mass destruction in orbit. In 2018, then-U.S. President Trump, at the signing of Space Policy Directive-3, directed the creation of a “Space Force”, treating space as a warfighting domain on a par with land, air and sea. In 2019, the “Joint Statement of the People’s Republic of China and the Russian Federation on Strengthening Contemporary Global Strategic Stability” called for “prohibiting the placement of any type of weapons in outer space” [4].

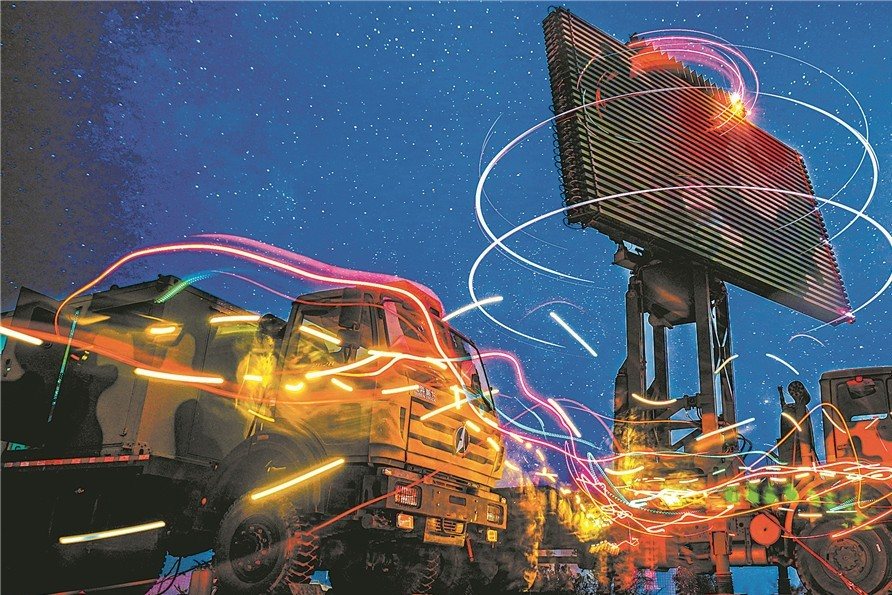

Beyond the space sector, weaponization is also spreading in the military, economic and diplomatic fields. “Military weaponization” refers to the use of resources (such as drones or nuclear weapons) for military purposes, the deployment of weapons systems, or the development of military capabilities. In the Russia-Ukraine war that began in 2022, a report by the Royal United Services Institute estimated that Ukraine was losing approximately 10,000 drones every month as a result of Russian jamming [5]. “Weaponization” also frequently appears alongside expressions such as “financial war” and “diplomatic battlefield”. In the economic sphere, weaponization usually refers to countries or organizations using shared resources or mechanisms of the global financial system as leverage against others; diplomatic weaponization manifests as countries pursuing their own interests and pressuring other countries through economic sanctions, diplomatic isolation and the manipulation of public opinion. Over time, the concept of “weaponization” has expanded into the political, social, cultural and other fields, especially the information field, and since the 2016 U.S. presidential election, the manipulation of public opinion has become a common tool in political struggles. David Petraeus, a former director of the U.S. Central Intelligence Agency, once remarked at a National Institute for Strategic Studies conference that the era of “the weaponization of everything” has arrived [6].

As a metaphor, “weaponization” refers not only to the use of actual physical tools; it also symbolizes how adversarial and aggressive behavior is carried into other domains, showing how the concept of the “weapon” permeates daily life, cultural production and political strategy, and how social actors use various tools to achieve strategic goals. Today, many areas that should remain neutral, such as the media, the law and government agencies, are often described as “weaponized” in criticism of their excessive politicization and improper use, highlighting their illegitimacy and negative impact on society. Through this metaphor, people unconsciously contrast the current political environment with an idealized and seemingly more moderate past, so that the politics of the past appear more rational and civil while the present appears excessively extreme and confrontational [7]. The essence of “weaponization” is therefore a process of politicization: political forces use various means and channels to influence or control areas that should remain neutral, turning them into instruments of political goals and political struggle.

In the information field, the weaponization of communication is a long-standing strategic practice. During the First and Second World Wars, propaganda and public opinion warfare were widely used by many countries, and the means of communication served as a psychological tactic. Weaponized communication is the embodiment of the weaponization of communication in the modern information society: it uses algorithms and big data analysis to precisely control the speed and scope of information dissemination, and through them public opinion and emotion. It reflects a combination of technology, platforms and strategy that allows political forces to control public perception and the public opinion environment more accurately and efficiently. As the substance of public opinion, information is “weaponized” to influence social cognition and group behavior, and the concept of “war” has changed accordingly: it is no longer limited to traditional military confrontation but also includes psychological and cognitive warfare conducted through information dissemination and public opinion manipulation. This shift has produced a range of new terms, such as unrestricted warfare, new-generation warfare, asymmetric warfare and irregular warfare. Almost all of these terms borrow the word “warfare” to emphasize the diverse conflicts in the information field, in which information becomes the core object of “weaponization”.
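The claim that algorithmic distribution governs the scope of dissemination can be illustrated with the independent cascade model, a standard diffusion model from network science that the article itself does not use. The sketch below is a toy under stated assumptions: a random follower graph, a single uniform sharing probability `p_share`, and hypothetical helper names (`random_graph`, `independent_cascade`, `average_reach`). It shows only that seeding the same message through more initial accounts widens its average reach; it does not model any real platform.

```python
import random
from typing import Dict, List, Set

# Adjacency list: an edge u -> v means v sees (and may reshare) u's posts.
Graph = Dict[int, List[int]]


def random_graph(n: int, avg_degree: float, rng: random.Random) -> Graph:
    """Directed random graph: each ordered pair (u, v), u != v, is an edge
    with probability avg_degree / (n - 1)."""
    p = avg_degree / (n - 1)
    return {u: [v for v in range(n) if v != u and rng.random() < p] for u in range(n)}


def independent_cascade(graph: Graph, seeds: List[int], p_share: float,
                        rng: random.Random) -> Set[int]:
    """Each newly activated node gets exactly one chance to activate each
    not-yet-active neighbour, succeeding with probability p_share."""
    active: Set[int] = set(seeds)
    frontier = list(seeds)
    while frontier:
        next_frontier: List[int] = []
        for u in frontier:
            for v in graph[u]:
                if v not in active and rng.random() < p_share:
                    active.add(v)
                    next_frontier.append(v)
        frontier = next_frontier
    return active


def average_reach(graph: Graph, n_seeds: int, p_share: float,
                  trials: int, seed: int = 0) -> float:
    """Mean number of activated nodes over repeated runs with random seed sets."""
    rng = random.Random(seed)
    nodes = list(graph)
    total = 0
    for _ in range(trials):
        seeds = rng.sample(nodes, n_seeds)
        total += len(independent_cascade(graph, seeds, p_share, rng))
    return total / trials


if __name__ == "__main__":
    g = random_graph(300, avg_degree=4, rng=random.Random(42))
    for k in (1, 5, 20):
        print(k, "seed accounts ->", average_reach(g, k, p_share=0.2, trials=200))
```

With these assumed parameters each share produces on average fewer than one further share, so a single post fizzles out quickly, and reach is driven mainly by how many accounts the message is seeded through.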

Although some argue that the term “war” does not apply to situations in which hostilities have not been formally declared [8], weaponized communication extends the concept of “war” by weakening its traditional political attributes and treating the overt or covert forces and forms at work in various fields, in general terms, as acts of communication. Note that English has two formulations of “weaponization”: one is “weaponized + noun”, meaning that something has been “weaponized”, i.e. given a weapon function or purpose; the other is “weaponization of + noun”, referring to the process of turning something into a weapon or giving it the nature of a weapon. In academic usage, Chinese translations vary, and “weaponized communication” and “weaponization of communication” are not yet strictly distinguished. “Weaponized communication” focuses more on the means of communication or the message itself being “weaponized” to achieve a particular strategic goal, whereas “weaponization of communication” emphasizes the process by which communication itself is transformed into a weapon. When discussing specific technical means, most academic papers use “weaponized” or “weaponizing” as a modifier for specific means of dissemination.

This article focuses on specific communication strategies in the international public opinion war and on describing weaponization phenomena that have already occurred, so it uses the term “weaponized communication” throughout. Weaponized communication is defined here as a strategic communication method that uses communication means, technical tools and information platforms to precisely control information flows, public cognition and emotional responses in order to achieve specific military, political or social purposes. Nor is weaponized communication simply a state of war or wartime; it is a continuous communication phenomenon. It reflects the interaction and contest among various actors, and constitutes both a flow of shared information and a space of meaning.

2. Application scenarios and implementation strategies of weaponized communication

If, at the end of the 1990s, weaponization in the information field was still a marginal topic and countries were mainly competing to upgrade physical weapons such as missiles and drones, then in the 21st century cyberwarfare has truly entered the public eye and become deeply embedded in daily life. Through social media and smart devices, the public is inevitably drawn into the public opinion war and unconsciously becomes participants or communication nodes. As technology has spread, weaponized means have gradually expanded from state-led instruments of war into socialized and politicized domains, and control over individuals and society has shifted from the explicit state apparatus to more covert manipulation of ideas. The exposure of the PRISM program raised strong global concern about privacy violations, highlighting the potential for states to use advanced technology for surveillance and control, which is seen as a new type of weaponization. Since Trump was elected President of the United States in 2016, the large-scale use of information weapons such as social bots has become common in global political contests. Information warfare, including electronic warfare, computer network warfare, psychological warfare and military deception, is widely used to manipulate the flow of information and shape the public opinion landscape. These methods operate not only in military conflicts and political elections; they also increasingly permeate cultural conflicts, social movements and transnational contests, extending the traditional logic of information warfare. Today, weaponized communication, as a socio-political tool, profoundly affects the public opinion ecosystem, international relations and the daily lives of individuals.

(1) Information manipulation warfare in the military field

Information flow can directly influence the direction of military conflicts, shaping public and military perceptions and decisions, which in turn affects morale, strategic judgment, and social stability. In modern warfare, information is no longer a mere aid, and the field of information has become a central battleground. By manipulating the flow of information, the enemy’s situation assessment may be misled, the will to fight is weakened, and the trust and support of the people are shaken, which in turn affects the decision-making process and continuity of the war.

The Gulf War is regarded as the beginning of modern information warfare. In that war, the United States carried out systematic strikes against Iraq through high-tech means, including electronic warfare, air strikes and information operations. The U.S. military used satellites and AWACS early-warning aircraft to monitor the battlefield in real time, and induced Iraqi troops to surrender by airdropping leaflets and using radio broadcasts to convey to Iraqi soldiers the superiority of U.S. forces and the favorable treatment promised to those who surrendered. The war marked the central place of information control in military conflict and demonstrated the potential of information warfare in modern combat. In the 21st century, cyberwarfare has become an important part of information warfare. It involves not only the dissemination and manipulation of information but also control over an adversary's social functions through attacks on critical infrastructure. In 2007, Estonia suffered a large-scale distributed denial-of-service (DDoS) attack, illustrating the growing fusion of information manipulation and cyberattacks. In the 2017 WannaCry ransomware incident, attackers exploited a Windows vulnerability (EternalBlue) to encrypt files on approximately 200,000 computers in 150 countries and demanded ransoms; the attack seriously affected the British National Health Service (NHS), interrupting emergency services and paralyzing hospital systems, and further revealed the threat that cyberwarfare poses to critical infrastructure. In protracted conflicts, moreover, control of infrastructure is widely used to undermine an adversary's ability to compete for the public information space, because such control directly determines the speed, scope and direction of information dissemination. Israel has effectively weakened Palestinian communications capabilities by restricting the use of radio spectrum, controlling Internet bandwidth and disrupting communications facilities. At the same time, Israel restricts the development of the Palestinian telecommunications market through economic sanctions and legal frameworks, suppressing Palestinian competitiveness in the flow of information; this maintains an unequal flow of information and consolidates Israel's strategic advantage in the conflict [9].

Social media provides an immediate and far-reaching channel for information manipulation, allowing it to cross borders and influence public sentiment and political situations worldwide, and shifting the focus of war from physical destruction alone to the manipulation of public opinion. During the Russia-Ukraine war, deepfake technology was used as a visual weapon that significantly interfered with public perceptions of and opinion about the war. On March 15, 2022, a fake video of Ukrainian President Volodymyr Zelenskyy circulated on Twitter in which he appeared to call on Ukrainian soldiers to lay down their weapons, briefly causing public confusion. Similarly, fake videos of Russian President Vladimir Putin were used to confuse the public. Although the platform promptly flagged the videos with a “Stay informed” notice, they still noticeably disturbed public emotions and perceptions in the short term. These events highlight the critical role of social media in modern information warfare, where state and non-state actors can interfere in military conflicts through disinformation, emotional manipulation and other means.

The complexity of information manipulation warfare is also reflected in its dual nature: it is both a tool of attack and a means of defense. In the military sphere, by defending against and countering cyberattacks, states safeguard national security, protect critical infrastructure and military secrets, and in some cases affect an adversary's combat effectiveness and decision-making. In 2015 and 2017, Russian hackers launched large-scale cyberattacks against Ukraine (such as BlackEnergy and NotPetya). By rapidly upgrading its cyber defense systems, Ukraine resisted some of these attacks and took countermeasures, avoiding larger-scale paralysis of its infrastructure. In addition, bodies such as the NATO Strategic Communications Centre of Excellence and the British Army's 77th Brigade focus on shaping public opinion in peacetime [10], using strategic communications, psychological warfare and social media monitoring to extend strategic control in the information field and strengthen defense and opinion-shaping capabilities, further raising the strategic importance of information warfare.

Today, information manipulation warfare is a key link in modern military conflicts. Through the high degree of integration of information technology and psychological manipulation, it not only changes the rules of traditional warfare, but also profoundly affects public perception and the global security landscape. By taking control of critical infrastructure and social media platforms, countries, multinational corporations or other actors can gain strategic advantages in the global information ecosystem by restricting the flow of information and manipulating communication paths.

(2) Public opinion intervention in political elections

Political elections are the most direct arena of competition for power in democratic politics, and information dissemination strongly influences voters' decisions in the process. Through computational propaganda and other means, external forces or political groups can manipulate voters' sentiments and mislead the public, thereby influencing election results, destabilizing politics or weakening the democratic process. Elections are therefore the most effective application scenario for weaponized communication.

In recent years, political elections around the world have shown a trend toward polarization, with large ideological differences between groups of different political affiliations. Polarization leads people to selectively accept information that is consistent with their own views while excluding other information; this “echo chamber effect” intensifies one-sided perceptions of positions and gives greater scope for public opinion intervention. The rise of information dissemination technology, especially computational propaganda, has further enabled external forces to manipulate public opinion and influence voters' decisions more precisely. Computational propaganda refers to the use of computing technology, algorithms and automated systems to control the flow of information in order to disseminate political messages, interfere with election results and influence public opinion. Its core characteristics are algorithm-driven precision and the scale of automated communication; by overcoming the limitations of traditional manual communication, it significantly amplifies the effect of public opinion manipulation. In the 2016 U.S. presidential election, the Trump campaign analyzed Facebook user data through Cambridge Analytica and pushed customized political advertisements to voters, precisely targeting their voting intentions [11]. This incident came to be seen as a classic case of computational propaganda interfering in elections; it also provided an operational template for other politicians and drove the widespread use of computational propaganda worldwide. In the 2017 French presidential election, candidate Emmanuel Macron's campaign was hacked and internal emails were stolen and published, alongside claims that Macron held secret offshore accounts and had evaded taxes, in an attempt to discredit him. During the 2018 Brazilian presidential election, candidate Jair Bolsonaro's team used WhatsApp groups to spread inflammatory political content, pushing large volumes of targeted images, videos and inflammatory messages to influence voter sentiment. According to statistics, the number of countries using computational propaganda worldwide rose from 28 in 2017 to 70 in 2019, and to 81 in 2020. This suggests that computational propaganda, through technical means and communication strategies, is redefining the rules of public opinion in elections worldwide.
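The “echo chamber effect” described above, in which people accept only information close to their existing views, is often studied with bounded-confidence opinion models. The following sketch uses the Deffuant-Weisbuch model as an illustrative stand-in; this is an assumption, since the article names no model, and the parameter values and helper names are hypothetical. Agents hold opinions in [0, 1] and move toward a peer only when that peer's opinion lies within a tolerance `epsilon`. With a wide tolerance the population converges on one shared view; with a narrow one it splits into several separated opinion clusters.

```python
import random
from typing import List


def deffuant(n: int, epsilon: float, mu: float = 0.5,
             steps: int = 100_000, seed: int = 0) -> List[float]:
    """Deffuant-Weisbuch bounded-confidence dynamics.

    Opinions start uniformly in [0, 1]. At each step two random agents meet;
    they influence each other only if their opinions differ by less than
    epsilon (a stand-in for selective exposure), in which case each moves a
    fraction mu of the way toward the other.
    """
    rng = random.Random(seed)
    x = [rng.random() for _ in range(n)]
    for _ in range(steps):
        i, j = rng.sample(range(n), 2)
        diff = x[j] - x[i]
        if abs(diff) < epsilon:
            x[i] += mu * diff
            x[j] -= mu * diff
    return x


def count_clusters(opinions: List[float], gap: float = 0.05) -> int:
    """Count groups of opinions separated by more than `gap` once sorted."""
    xs = sorted(opinions)
    return 1 + sum(1 for a, b in zip(xs, xs[1:]) if b - a > gap)


if __name__ == "__main__":
    for eps in (0.5, 0.1):
        final = deffuant(n=200, epsilon=eps)
        print(f"epsilon={eps}: {count_clusters(final)} opinion cluster(s)")
```

The only difference between the two runs is how far apart two views may be before people stop listening to each other, which is the mechanism the echo-chamber argument relies on.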

Computational propaganda is also an important tool for state actors in public opinion intervention. In 2011, U.S. Central Command (CENTCOM) launched “Operation Earnest Voice” in the Middle East, which sought to distort conversations on Arabic-language social media by creating and managing multiple false identities (sockpuppets). Russia also frequently uses computational propaganda to intervene: it has operated about 200,000 social media accounts in Canada, exploiting far-right and far-left movements to spread pro-Russian narratives, manufacture false social flashpoints and try to undermine Canadian support for Ukraine [12]. As an important component of computational propaganda, social bots generate public opinion “heat” through automation and scale, increasing the exposure of information on social platforms through specific hashtags and controlling the priority of issues. During the 2016 U.S. election, Russia used social bots to post content supporting Putin and attacking the opposition, drowning out opposition voices through information overload and reinforcing a pro-Putin climate of opinion [13]. During the 2017 Gulf crisis, Saudi Arabia and Egypt used Twitter bots to create anti-Qatar hashtags such as #AlJazeeraInsultsKingSalman, turning them into trending topics and manufacturing an apparent peak of anti-Qatar sentiment, which in turn affected global attitudes toward Qatar [14]. Deepfake technology further increases the precision and concealment of computational propaganda. In 2024, a fake video of U.S. President Joe Biden went viral on X (formerly Twitter), showing him using offensive language in the Oval Office, sparking public controversy and influencing voter sentiment. According to a survey by the cybersecurity firm McAfee, 63% of respondents had watched a political deepfake video within the previous two months, and nearly half said the content had influenced their voting decisions [15].
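The way social bots “create the heat of public opinion” through a handful of hashtags can be illustrated with a toy trending list. This is a minimal sketch under assumptions: both ranking rules, the hashtags and the account names are hypothetical, and neither rule is any platform's actual trending algorithm. It contrasts ranking by raw post volume, which a few hyperactive automated accounts can inflate, with ranking by the number of distinct accounts using a tag.

```python
from collections import Counter, defaultdict
from typing import Dict, List, Set, Tuple

Post = Tuple[str, str]  # (account_id, hashtag)


def naive_trending(posts: List[Post], k: int = 3) -> List[Tuple[str, int]]:
    """Rank hashtags by raw post volume, which automated accounts can inflate."""
    return Counter(tag for _, tag in posts).most_common(k)


def distinct_author_trending(posts: List[Post], k: int = 3) -> List[Tuple[str, int]]:
    """Rank hashtags by the number of distinct accounts that used them."""
    authors: Dict[str, Set[str]] = defaultdict(set)
    for account, tag in posts:
        authors[tag].add(account)
    return Counter({tag: len(accs) for tag, accs in authors.items()}).most_common(k)


if __name__ == "__main__":
    # Hypothetical data: 60 people post an organic tag once each, while
    # 5 automated accounts post a coordinated tag 40 times each.
    organic = [(f"user{i}", "#OrganicTopic") for i in range(60)]
    botnet = [(f"bot{b}", "#CoordinatedTag") for b in range(5) for _ in range(40)]
    posts = organic + botnet
    print(naive_trending(posts, 1))            # [('#CoordinatedTag', 200)]
    print(distinct_author_trending(posts, 1))  # [('#OrganicTopic', 60)]
```

Counting distinct accounts blunts a few hyperactive bots, but it offers little protection against operations that control very many accounts, such as the roughly 200,000-account network described in this section. That is one reason scale matters so much to computational propaganda.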

Globally, computational propaganda has infiltrated the public opinion wars of many countries, affecting social stability and national security. The Israel Defense Forces have waged a public opinion war against Palestine with digital weapons, Turkey has cultivated a “patriotic troll army” to manipulate opinion at home and abroad, and the Mexican government has used botnets to influence public opinion. As an important means of modern public opinion intervention, computational propaganda is changing the landscape of global political communication. With the development of technologies such as artificial intelligence and quantum computing, it may interfere with electoral processes through even more covert and efficient means, and may even directly threaten the core operating logic of democratic institutions.

(3) Symbolic identity war in the cultural field

Weaponized communication attempts to influence the public's thoughts, emotions and behavior by manipulating information, symbols and values, thereby shaping or changing a society's collective cognition and cultural identity. This mode of communication involves not only the transmission of information but also the promotion of, and identification with, a specific ideology or political idea through particular narrative frameworks, cultural symbols and emotional resonance. By manipulating cultural symbols, social emotions and collective memory, weaponized communication interferes with social structure and cultural identity in the cultural field, becoming a core means of symbolic identity warfare.

Memes, cultural symbols that combine visual elements with concise text, provoke emotional responses in humorous, satirical or provocative ways and thereby affect their audience's political attitudes and behavior. Pepe the Frog began as a harmless comic character but was appropriated and weaponized by far-right groups to spread hate speech, gradually becoming a racist and anti-immigrant symbol. Memes turn complex political sentiments into easily spread visual symbols that can quickly stir public distrust of and anger over policy, a process described as “iconoclastic weaponization”. By manipulating cultural symbols in the service of political or social struggle [16], this process deepens social and political divisions. During Brexit, for example, memes bearing the slogan “Take Back Control” spread rapidly and reinforced nationalist sentiment.

Beyond the creation of cultural symbols, the filtering and suppression of symbols can equally shape or deepen a particular cultural identity or political stance. Censorship has been an important means by which power controls information since ancient times; as early as ancient Greece and Rome, governments censored public speeches and literary works to maintain social order and the stability of power. In the digital age, the rise of the Internet and social media has modernized censorship, and platform moderation has gradually replaced traditional censorship as a core tool of contemporary information control and public opinion guidance. Algorithmic review uses artificial intelligence to detect sensitive topics, keywords and user behavior data and automatically deletes or blocks content deemed to be in “violation”, while social media review teams manually screen user-generated content to ensure that it complies with platform policies, laws and regulations. Platform moderation not only limits the spread of certain content; through promotion, deletion and blocking it also guides public opinion and shapes the public's framework of perception. While mainstream social platforms control the spread of information through strict content moderation, some fringe platforms such as Gab, Gettr and BitChute have become hotbeds of extreme speech and malicious information because they lack effective moderation. These platforms place insufficient restrictions on what can be published, allowing extreme views and disinformation to spread unchecked. Gab, for example, has been repeatedly criticized for extremist content and accused of promoting violence and hatred. Inside such an “echo chamber”, users encounter only information consistent with their own views, an environment that further reinforces extreme ideas and heightens antagonism between social groups [17].
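The two-tier review described above, automated detection followed by manual screening, can be sketched as a toy rule-based filter. This is an illustrative assumption, not any platform's real system: the blocklist terms, the `block_at` threshold and the function names are hypothetical, and the article notes that real systems draw on artificial intelligence and user behavior data rather than a bare keyword list. Posts with several hits are removed automatically, posts with a single hit are routed to human reviewers, and the rest are allowed.

```python
import re
from typing import Iterable, List, Tuple


def build_pattern(terms: Iterable[str]) -> "re.Pattern[str]":
    """Compile a case-insensitive, whole-word pattern for the given terms."""
    terms = list(terms)
    if not terms:
        raise ValueError("need at least one term")
    # Longest terms first so overlapping alternatives prefer the longer match.
    alternatives = "|".join(sorted((re.escape(t) for t in terms), key=len, reverse=True))
    return re.compile(rf"\b(?:{alternatives})\b", re.IGNORECASE)


def moderate(text: str, pattern: "re.Pattern[str]",
             block_at: int = 2) -> Tuple[str, List[str]]:
    """Return (action, matched_terms): 'block' at block_at or more hits,
    'human_review' for at least one hit, otherwise 'allow'."""
    hits = [m.group(0).lower() for m in pattern.finditer(text)]
    if len(hits) >= block_at:
        return "block", hits
    if hits:
        return "human_review", hits
    return "allow", hits


if __name__ == "__main__":
    blocklist = build_pattern(["spamword", "scamlink"])  # hypothetical terms
    print(moderate("Nothing to see here", blocklist))      # ('allow', [])
    print(moderate("Click this SCAMLINK now", blocklist))  # ('human_review', ['scamlink'])
    print(moderate("spamword plus scamlink", blocklist))   # ('block', ['spamword', 'scamlink'])
    print(moderate("Click this sc4mlink now", blocklist))  # ('allow', [])
```

The last call shows the basic weakness of keyword matching: a trivial misspelling slips past the filter, while an innocent post that happens to contain a listed word would still be flagged. This gap is part of why platforms combine automated review with human review teams.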

Language, as both a carrier and a tool of information dissemination, can profoundly influence group behavior and cultural identity through emotional manipulation, symbolic politics and social mobilization. The weaponization of language concerns how linguistic forms and cultural contexts affect the way information is received, emphasizing how language can be used to manipulate, guide or change people's cognition and behavior. This involves not only specific vocabulary and rhetorical devices but also the construction of particular social meanings and cultural frameworks through linguistic representation. As another important tool of symbolic identity warfare, language shapes an “us versus the enemy” narrative of antagonism. The “Great Translation Movement”, for example, used selective translation to bring nationalist remarks by Chinese netizens to international social media platforms, fueling negative perceptions of China. This kind of linguistic manipulation amplifies controversial content through emotional expression and deepens the cultural biases of the international community.

The deeper logic of the weaponization of language lies in emotional and inflammatory forms of expression. Western countries often legitimize political or military intervention by invoking labels of justice such as “human rights” and “democracy”. White supremacists have repackaged their ideology under vague labels such as “alt-right”, recasting the strongly negative “white supremacist” as a more neutral concept; this lowers social resistance to the vocabulary and broadens its base of support under a loose “umbrella” identity. By infiltrating everyday discourse, hate politics and extreme speech are justified and gradually normalized. Language is truly weaponized once the public comes to treat this kind of politics as routine [18]. In Nigeria, hate-mongering content spreads through ethnic, religious and regional topics, severely damaging social relations [19]. Linguistic ambiguity and strategies of plausible deniability have also become powerful tools that let communicators evade responsibility and present complex social and political issues as simplified narratives. Through negative labeling and emotionally charged rhetoric, Trump's “America First” policy deliberately set itself against mainstream opinion by opposing globalization, questioning climate science and criticizing traditional allies, stoking public distrust of globalization and reshaping cultural identity around putting national interests first [20].

III Risks and challenges of weaponized communication: legitimacy and destructiveness

Although weaponized communication poses great risks to the international public opinion landscape, in specific situations certain countries or groups may lend it a degree of legitimacy through legal, political or moral frameworks. After the 9/11 attacks, for example, the United States passed the Patriot Act, which expanded the surveillance powers of intelligence agencies and imposed extensive information controls in the name of “counter-terrorism”. Such “legitimacy” is often criticized for undermining civil liberties and eroding the core values of democratic society.

In international political competition, weaponized communication is more often seen as a “gray zone” tactic. Confrontation between countries is no longer limited to economic sanctions or diplomatic pressure, but is also waged through non-traditional means such as information manipulation and social media interference. Some states use “protecting national interests” as a pretext for spreading false information, arguing that their actions are compliant; although such actions may be controversial under international law, they are often justified as necessary means “to counter external threats”. In some countries where information regulation lacks a strict legal framework, election interference is often tolerated or even regarded as a “justified” political exercise. At the cultural level, certain countries try to extend their cultural influence globally by disseminating specific cultural symbols and ideologies. Western countries often promote their values in the name of “cultural sharing” and “communication among civilizations”, but in practice they weaken other cultures’ identities by manipulating cultural symbols and narrative frameworks, producing an imbalance in the global cultural ecology. Legal frameworks also, to some extent, supply justifications for weaponized communication. In the name of “counter-terrorism” and “countering extremism”, some countries restrict the spread of so-called “harmful information” through censorship, content filtering and similar means. Such justifications, however, often push against moral boundaries, resulting in information blockades and the suppression of speech. Information governance conducted on “national security” grounds, although it enjoys a degree of domestic acceptance, creates space for weaponized communication to proliferate.

Set against these claims to legitimacy, the destructiveness of weaponized communication is especially pronounced. It has become an important tool through which power structures manipulate public opinion. It not only distorts the content of information, but also, through privacy violations, emotional mobilization and cultural penetration, profoundly affects public perception, social emotions and international relations.

(1) Information distortion and cognitive manipulation

Information distortion means that information is altered, deliberately or unintentionally, during dissemination, so that what the public receives differs significantly from the original. On social media, disinformation and misleading content spread rampantly, and content generated by artificial intelligence models (such as GPT) may reproduce biases present in their training data: gender, racial or social biases can surface in automatically generated text, amplifying the risk of distortion. Because social media spreads information so quickly, traditional fact-checking mechanisms struggle to keep pace with disinformation, which often dominates public opinion within a short period, while cross-platform dissemination and anonymity complicate clarification and correction. This asymmetry in communication undermines the authority of traditional news organizations: the public’s tendency to trust instantly updated social media information over the in-depth coverage of traditional news organizations further weakens their role in resisting disinformation.

Beyond distorting information itself, weaponized communication exploits the psychological mechanism of cognitive dissonance, the discomfort an individual feels when exposed to information that conflicts with pre-existing beliefs or attitudes. By creating cognitive dissonance, communicators shake the established attitudes of their target audience and may even induce them to accept new ideologies. In elections, targeted dissemination of negative information often pushes voters to re-examine their political positions or even change their votes. Weaponized communication also reinforces “information cocoons” through selective exposure: audiences gravitate toward information consistent with their own beliefs while ignoring or rejecting opposing views. This not only entrenches individual cognitive biases but also lets disinformation spread rapidly within a group, where external facts and rational voices struggle to break through, ultimately producing a highly homogeneous public opinion ecology.
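The selective-exposure mechanism described above can be illustrated with a toy model. The sketch below is a hypothetical illustration, not a model taken from this article: it assumes that each item carries a stance score on an invented scale from -3 (strongly against) to +3 (strongly for), and that a feed shows a user only items within a fixed tolerance of the user’s own stance. Comparing the spread of stances in the full pool with the spread in the filtered feed shows how such filtering narrows the range of viewpoints a user encounters.

```python
from statistics import pstdev


def selective_feed(stances, belief, tolerance):
    """Keep only items whose stance lies within `tolerance` of the user's belief.

    Hypothetical model: stances and beliefs are integers on an assumed
    -3 (strongly against) to +3 (strongly for) scale.
    """
    return [s for s in stances if abs(s - belief) <= tolerance]


def viewpoint_spread(stances):
    """Population standard deviation of the stances a user actually sees."""
    return pstdev(stances) if len(stances) > 1 else 0.0


# Invented pool of seven items spanning the whole stance scale.
pool = [-3, -2, -1, 0, 1, 2, 3]
feed = selective_feed(pool, belief=2, tolerance=1)

print(feed)                    # [1, 2, 3]
print(viewpoint_spread(pool))  # 2.0
print(viewpoint_spread(feed))  # about 0.82
```

Repeating the filter round after round, with the user’s belief drifting toward what the feed shows, would narrow exposure further; the single round here is kept deliberately small.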

(2) Privacy leakage and digital surveillance

In recent years, the abuse of deepfakes has worsened privacy violations. In 2019, the face-swapping app “ZAO” was pulled from app stores because its terms assumed users’ consent to the use of their portrait rights by default, exposing the risk of excessive biometric data collection. Photos uploaded by users and processed with deep learning can be used to generate convincing face-swapped videos, and can also become a source of privacy leaks. Worse still, deepfakes have been abused for gender-based violence: the faces of a number of European and American actresses have been grafted onto fake pornographic videos that were widely circulated, and although platforms remove such content in some cases, the availability of open-source tools makes it easy for malicious users to copy and share forged material. In addition, social media users tend to grant platforms default access to their devices’ photos, camera, microphone and other permissions. Through these permissions, platforms not only collect large amounts of personal data but also use algorithms to analyze users’ behavioral characteristics, interests and social relationships, enabling precise ad targeting, content recommendation and even information manipulation. Such large-scale data acquisition has prompted a global debate on privacy protection. In Europe, the General Data Protection Regulation (GDPR) seeks to strengthen the protection of individual privacy through strict rules on data collection and use. However, through “implicit consent” or complex user agreements, platforms often circumvent these rules, making data processing less transparent and leaving ordinary users unable to understand how their data is actually used. Section 230 of the U.S. Communications Decency Act provides that online platforms are not legally liable for user-generated content, a provision that has enabled the growth of platform content moderation but has also left platforms with little incentive to respond to privacy infringements. Driven by commercial interests, platforms often lag in handling disinformation and privacy problems, and their moderation responsibilities are repeatedly shelved.

In terms of digital surveillance, social platforms cooperate with governments, turning user data into a core resource of “surveillance capitalism”. The U.S. National Security Agency (NSA) has conducted mass surveillance through phone records, Internet communications and social media data, and has worked with large companies such as Google and Facebook to obtain users’ online behavioral data for worldwide intelligence gathering and behavioral analysis. The abuse of transnational surveillance technologies pushes privacy violations onto the international stage. The Pegasus spyware developed by the Israeli cybersecurity company NSO compromises target devices through “zero-click” attacks and can steal private information and communication records in real time. In the 2018 murder of Saudi journalist Jamal Khashoggi, the Saudi government used Pegasus to monitor communications surrounding him, revealing the profound threat this technology poses to individual privacy and international politics.

(3) Emotional polarization and social division

Emotions play a key role in individual cognition and decision-making. Weaponized communication undermines rational judgment by inciting fear, anger, sympathy and similar feelings, pushing the public toward irrational, emotion-driven reactions. War, violence and nationalism are frequent subjects of emotional mobilization: through carefully designed topics, communicators embed elements such as patriotism and religious belief in the information they spread, quickly arousing emotional resonance. The widespread adoption of digital technologies, particularly the combination of artificial intelligence and social media platforms, further amplifies the risk of emotional polarization. The rapid spread of disinformation and extreme speech on platforms is driven not only by ordinary users’ sharing but also by algorithms. Platforms tend to prioritize emotional, highly interactive content, which often contains inflammatory language and extreme views, thereby accelerating the spread of hate speech and extremism.
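A minimal sketch can make the algorithmic part of this mechanism concrete. It assumes a hypothetical ranking rule that orders posts purely by weighted engagement (likes, shares, comments); the weights and sample posts are invented for illustration and do not describe any real platform. Note that the rule has no input for emotion at all: if inflammatory posts attract more shares and comments, as argued above, an engagement-only ranking still pushes them to the top.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Post:
    title: str
    likes: int
    shares: int
    comments: int


# Hypothetical weights: shares and comments count more than likes because
# they signal stronger interaction. Real platforms do not publish such values.
WEIGHTS = {"likes": 1.0, "shares": 3.0, "comments": 2.0}


def engagement_score(post: Post) -> float:
    """Weighted sum of interactions; emotion is not an input."""
    return (WEIGHTS["likes"] * post.likes
            + WEIGHTS["shares"] * post.shares
            + WEIGHTS["comments"] * post.comments)


def rank_feed(posts: List[Post]) -> List[Post]:
    """Order posts from highest to lowest engagement score."""
    return sorted(posts, key=engagement_score, reverse=True)


posts = [
    Post("Policy explainer", likes=120, shares=10, comments=15),  # score 180
    Post("Outrage clip", likes=90, shares=60, comments=80),       # score 430
    Post("Local news update", likes=60, shares=5, comments=8),    # score 91
]

for post in rank_feed(posts):
    print(post.title, engagement_score(post))
```

Although the invented “Outrage clip” has fewer likes than the explainer, its higher share and comment counts place it first.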

Social media hashtags and algorithmic recommendations play a key role in emotional polarization. After the Charlie Hebdo incident, the #StopIslam hashtag became a vehicle for hate speech, used to post hateful and violent messages. During the 2020 U.S. presidential election, extreme political rhetoric and misinformation on social platforms were likewise amplified amid bitter partisan conflict. Through precise emotional manipulation, weaponized communication not only tears apart public dialogue but also seriously affects democratic processes. Another distinctive extremist mobilization tactic is “weaponized autism”, in which far-right groups exploit the technical expertise of autistic individuals to carry out emotional manipulation. These groups recruit technically skilled but socially isolated individuals and, by offering them a false sense of belonging, turn them into foot soldiers of information warfare, used to spread hate speech, carry out cyberattacks and promote extremism. The phenomenon reveals both the deep mechanisms of emotional manipulation and how technology can be exploited by extremist groups in service of broader political and social agendas.[21]

(4) Information colonization and cultural penetration

Weaponized interdependence theory explains how states exploit key nodes in political, economic and information networks to exert pressure on other states.[22] In the information domain in particular, developed countries consolidate their cultural and political advantages by controlling information flows, a practice described as “information colonization”. Digital platforms have become vehicles of this colonial process: countries of the Global South depend heavily on Western-dominated technology platforms and social networks to disseminate information, and in sub-Saharan Africa, Facebook has become synonymous with “the Internet”. This dependence not only generates large advertising revenues for Western companies, but also, through algorithmic recommendation, profoundly affects indigenous African cultures and values, especially regarding gender, family and religious belief, making cultural penetration the norm.
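The idea of a “key node” in weaponized interdependence theory can be illustrated with a simple graph check. The sketch below is a hypothetical illustration: it treats a network as an undirected adjacency list with invented node names and flags as a “chokepoint” any node whose removal disconnects the remaining nodes. This brute-force test for articulation points is only one possible structural reading of the theory’s hubs, not the theory’s own method.

```python
from collections import deque


def reachable(adj, start, removed):
    """Breadth-first search from `start`, skipping the `removed` node."""
    seen = {start}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        for nxt in adj[node]:
            if nxt != removed and nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    return seen


def chokepoints(adj):
    """Return nodes whose removal leaves the other nodes disconnected.

    Assumes `adj` describes a connected, undirected network: if B is in
    adj[A], then A is in adj[B].
    """
    result = []
    for node in adj:
        others = [n for n in adj if n != node]
        if not others:
            continue
        if len(reachable(adj, others[0], node)) < len(others):
            result.append(node)
    return result


# Invented network: "A" reaches the rest of the network only through "Hub".
network = {
    "Hub": ["A", "B", "C"],
    "A": ["Hub"],
    "B": ["Hub", "C"],
    "C": ["Hub", "B"],
}
print(chokepoints(network))  # ['Hub']
```

In this toy network, whoever controls “Hub” can cut “A” off entirely, which is the kind of structural leverage the theory describes.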

Digital inequality is another manifestation of information colonization. Developed countries’ dominance in digital technology and information resources has increasingly marginalized countries of the Global South economically, educationally and culturally. Inadequate infrastructure and a technological blockade have prevented Palestine from integrating effectively into the global digital economy, which both limits local economic development and further weakens its voice in global information dissemination. Through technology blockades and economic sanctions, the world’s major economic and information powers restrict other countries’ access to key technologies and innovation resources, which not only hinders scientific and technological development in the target countries but also deepens the fragmentation of the global technology and innovation ecosystem. Since the United States withdrew from the Iran nuclear deal in 2018, U.S. economic sanctions have impeded Iran’s development in the semiconductor and 5G sectors; such technological asymmetry widens gaps in the global technology ecosystem and leaves many countries at a disadvantage in information competition.

IV Reflection and discussion: the struggle for discourse power in an asymmetric communication landscape

In an asymmetric communication landscape, strong parties often dominate public opinion through mainstream media and international news organizations, while weak parties must use innovative communication technologies and methods to offset their disadvantages and compete for discourse power. At the heart of this landscape lies information geopolitics: the idea that the contest for power between states depends not only on geography, military strength or economic resources, but also on control of information, data and technology. Great-power competition is no longer confined to physical space but extends to the contest over the space of public opinion. These “information landscapes” encompass discourse power, information circulation and media influence within the global communication ecosystem, and states continuously construct such landscapes to influence international public opinion, shape global cognitive frameworks and achieve their strategic goals. Asymmetric communication strategy therefore concerns not only the transmission of content, but above all how various communication technologies, platforms and methods can bridge gaps in resources and capabilities. Information communication no longer centers on content alone; the competition unfolds around discourse power itself. With the rise of information warfare and cognitive warfare, whoever controls information gains a head start in global competition.

(1) Technological catch-up through latecomer advantage

Traditional major powers and strong communicators dominate global public opinion, whereas weak countries often lack channels through which to compete with them. The theory of latecomer advantage holds that latecomer countries can rise rapidly by leapfrogging traditional technological paths and adopting existing advanced technologies and knowledge, thereby skipping the inefficient and outdated stages of early innovation. In the context of weaponized communication, this theory offers information-weak countries a path to break through the communication barriers of large countries by means of emerging technologies and to catch up technically. Traditional media are often constrained by resources, influence and censorship; they disseminate information slowly, reach limited audiences and are vulnerable to manipulation by particular countries or groups. The rise of digital media has fundamentally changed the landscape of information dissemination, enabling disadvantaged countries to reach international audiences directly through globalized Internet platforms without relying on traditional news organizations and mainstream media. With emerging technologies, disadvantaged countries can not only transmit information more precisely but also rapidly expand their influence in international public opinion through targeted communication and emotional guidance. Latecomer countries can use advanced technologies such as big data, artificial intelligence and 5G networks to deliver information precisely and build efficient communication channels. With big data analysis, for example, latecomer countries can gain an in-depth understanding of audience needs and opinion trends, quickly read the pulse of global public opinion, carry out targeted communication and expand their international influence. AI technology can not only forecast how public opinion will develop but also optimize communication strategies in real time. The spread of 5G networks has greatly improved the speed and coverage of information dissemination, allowing latecomer countries to overcome the limits of traditional communication models at low cost and high efficiency and to build distinctive communication advantages.
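The kind of “big data analysis” mentioned above can start from something as simple as comparing how often topics appear in two time windows. The sketch below assumes a hypothetical input in which each post is reduced to a list of hashtags and both windows contain the same number of posts; it ranks hashtags by a smoothed ratio of recent to baseline counts. The hashtags and the `min_count` threshold are invented for illustration, and a working system would presumably need more, such as volume normalization and filtering of coordinated accounts.

```python
from collections import Counter


def rising_topics(baseline_posts, recent_posts, min_count=2):
    """Rank hashtags by lift = (recent count + 1) / (baseline count + 1).

    Assumes both windows hold the same number of posts, so raw counts are
    comparable; the +1 smoothing avoids division by zero for new hashtags.
    """
    baseline = Counter(tag for post in baseline_posts for tag in post)
    recent = Counter(tag for post in recent_posts for tag in post)
    lifts = {
        tag: (count + 1) / (baseline[tag] + 1)
        for tag, count in recent.items()
        if count >= min_count  # ignore hashtags too rare to be meaningful
    }
    return sorted(lifts.items(), key=lambda item: item[1], reverse=True)


# Invented sample: four posts per window, each reduced to its hashtags.
baseline_window = [["#energy"], ["#energy", "#trade"], ["#trade"], ["#energy"]]
recent_window = [["#energy"], ["#drought", "#energy"], ["#drought"], ["#drought", "#trade"]]

print(rising_topics(baseline_window, recent_window))
# [('#drought', 4.0), ('#energy', 0.75)]
```

Here “#drought”, absent from the baseline, surfaces as the rising topic, while “#trade” is dropped because it appears only once in the recent window.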

Through transnational cooperation, latecomer countries can pool more communication resources and extend the breadth and depth of their communication. For example, Argentina has established a “Latin American News Network” with other Latin American countries, pushing the region to speak with one voice in international public opinion and countering the single narrative of Western media through news-content sharing. In Africa, South Africa has partnered with Huawei on the “Smart South Africa” project to build modern information infrastructure and drive digital transformation and more efficient public services. Governments of latecomer countries should invest more in technological research, development and innovation and encourage the growth of local enterprises and talent. At the same time, they should attend to cultural exports and the development of the media industry, so as to strengthen the country’s voice in the international information space through globalized cooperation and decentralized communication models. Governments can fund digital cultural production, support the growth of local social media platforms, and integrate more communication resources through international cooperation frameworks.

(2) Building defensive barriers in information countermeasures

Unlike military action, which may trigger full-scale conflict, or economic sanctions, which carry their own risks, weaponized communication can achieve strategic objectives without provoking full-scale war, which makes it highly attractive on grounds of both cost and strategy. Because weaponized communication is low in cost and high in return, a growing number of state and non-state actors choose to manipulate information to reach their strategic goals. The spread of these methods confronts countries with increasingly complex and changeable threats from both external and internal information attacks. As information warfare intensifies, traditional military defense alone can no longer meet the needs of modern warfare; building a robust information defense system has instead become a key strategy for maintaining political stability, safeguarding social identity and enhancing international competitiveness. How to deal effectively with external information interference and public opinion manipulation, and how to mount information countermeasures, has therefore become an urgent issue for all countries. A complete cybersecurity infrastructure is key to protecting national security against external manipulation or tampering with sensitive information. The European Union, for example, has pushed to strengthen cybersecurity in member states through its “Digital Single Market” strategy, which requires Internet companies to act more aggressively against disinformation and external interference. The EU’s cybersecurity directives also require member states to establish emergency response mechanisms to protect critical information infrastructure from cyberattacks. In addition, the EU has established cooperation with social media companies such as Facebook, Twitter and Google to combat the spread of fake news through anti-disinformation tools and data analysis technologies. Artificial intelligence, big data and automation are becoming important tools of information defense, used to monitor information propagation paths in real time, identify potential disinformation and resist public opinion manipulation. In cybersecurity, big data analysis helps decision makers identify and warn of malicious attacks and optimize countermeasures. These technologies not only strengthen information defense at the domestic level but also increase a country’s initiative and competitiveness in the international information space.
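Monitoring “information propagation paths in real time” typically begins by reconstructing who shared what from whom. The sketch below assumes hypothetical reshare records of the form `(sharer, source)` and an invented review threshold; it rebuilds the share cascade of a single post and reports its reach (accounts reached after the original poster) and depth (longest chain of reshares), flagging large cascades for human fact-checking. It illustrates the general idea only and is not the method of the EU programs or platforms mentioned above.

```python
from collections import defaultdict, deque


def cascade_stats(shares, root):
    """Return (reach, depth) of the share cascade that starts at `root`.

    `shares` is a list of (sharer, source) pairs meaning `sharer` reshared
    the item from `source`. Reach counts accounts reached after the root;
    depth is the length of the longest reshare chain.
    """
    children = defaultdict(list)
    for sharer, source in shares:
        children[source].append(sharer)

    seen = {root}
    queue = deque([(root, 0)])
    depth = 0
    while queue:  # breadth-first traversal of the cascade
        account, level = queue.popleft()
        depth = max(depth, level)
        for child in children[account]:
            if child not in seen:  # guard against duplicate or cyclic records
                seen.add(child)
                queue.append((child, level + 1))
    return len(seen) - 1, depth


def needs_review(shares, root, reach_threshold=4):
    """Hypothetical rule: send cascades at or above a reach threshold to fact-checkers."""
    reach, _ = cascade_stats(shares, root)
    return reach >= reach_threshold


# Invented reshare log for a single post by account "a".
log = [("b", "a"), ("c", "a"), ("d", "b"), ("e", "d"), ("f", "c")]
print(cascade_stats(log, "a"))  # (5, 3)
print(needs_review(log, "a"))   # True
```

Depth matters alongside reach: a deep, narrow cascade (a chain of reshares through many communities) can signal a different spreading pattern from a shallow burst around one popular account.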

Counter-mechanisms are another important component of an information defense system. Under the pressure of international public opinion in particular, real-time monitoring of incoming information and timely correction of disinformation are key to retaining the initiative in public opinion. Since the 2014 Crimean crisis, Ukraine has built a fairly large-scale cyber defense system in cooperation with NATO and the United States. Ukraine’s National Cyber Security Service has set up “information countermeasure teams” to counter cyber threats, using social media and news release platforms to refute false Russian reports in real time, a tactic that has significantly boosted Ukraine’s reputation and credibility in international public opinion.

(3) Agenda setting in public opinion guidance

In a globally competitive landscape shaped by informatization and digitalization, guiding public opinion involves not only the content of information but, more importantly, setting the agenda and focusing on hot topics of global concern. Agenda-setting theory suggests that whoever controls the topics in circulation can steer the direction of public opinion. Agenda setting shapes how the public attends to and evaluates events by controlling the scope and focus of discussion, and the rise of social media gives information-disadvantaged countries an opening to compete for dominance in information dissemination through coordinated use of multiple platforms. Ukraine is a case in point: during the Russia-Ukraine war it used social media not only to publish the actual combat situation but also to convey the emotional appeals of its people, drawing on tragic narratives of civilian suffering and urban destruction to win sympathy and attention from the international community. While resisting external information interference, a state also needs to proactively disseminate positive narratives and tell cultural stories that resonate internationally. Such stories should speak to the emotional needs of international public opinion while showing the country’s uniqueness and strengthening its ties with the international community. Take China’s joint construction of the “One Belt, One Road” initiative as an example: China has invested in and built a large number of infrastructure projects in partner countries. These projects have not only helped improve local economic conditions but also demonstrated China’s responsibility in the process of globalization, providing a window for cultural cooperation and exchange that shows the world the rich history and culture of the Chinese nation and demonstrates the inclusiveness and sense of responsibility of Chinese culture to the international community.

Countries of the Global South, however, often face constraints in resources, technology and access to international communication platforms and find it difficult to compete directly with developed countries, so they rely on more flexible and innovative means of communication to take part in setting the global agenda. Brazil, for example, faces negative pressure from Western media over environmental protection and climate change, especially deforestation in the Amazon. In response, the Brazilian government actively builds the country’s environmental image by using social media to publish recent data and success stories about Amazon protection. At the same time, Brazil has strengthened its voice on climate issues by working with other developing countries in global climate negotiations and promoting South-South cooperation. Major international events, humanitarian activities and cultural products are also effective ways of telling national stories. International sporting events such as the World Cup and the Olympic Games are not only platforms for athletic competition but also showcases for national image and cultural soft power. By hosting or actively participating in such global events, a country can display its strength, values and cultural appeal to the world and promote a favorable public opinion agenda.

“War is nothing more than the continuation of politics by other means.”[23] Clausewitz’s classic dictum takes on a modern form in the context of weaponized communication. Weaponized communication breaks through the physical boundaries of traditional warfare and becomes a modern strategic instrument integrating information warfare, cognitive warfare and psychological warfare. It manipulates information flows and public perception in a non-violent form, allowing state and non-state actors to achieve political goals without direct military action, which makes it highly strategic and targeted. By manipulating information, emotions and values, weaponized communication can achieve strategic goals while avoiding all-out war, and in global competition and conflict it has become an important means by which powerful countries suppress weaker ones.

The core of weaponized communication lies in weakening an adversary’s decision-making and operational capabilities through information manipulation, but its complexity makes its effects difficult to predict fully. Although information-powerful countries suppress information-weak countries through technological advantages and control of communication channels, the effectiveness of that communication is uncertain. Especially now that social media and digital platforms are global, the boundaries and effects of information flows are increasingly hard to control. This complexity gives weak countries an opportunity to break the hegemony of discourse and reverse the direction of the information game: they can use the same platforms to push back, challenge the information manipulation of powerful countries and claim a place in global public opinion. This asymmetric game reflects a dynamic balance in international public opinion, in which communication is no longer one-way control but more complex interaction and dialogue that gives the weak a chance to influence opinion. The current international public opinion landscape is still dominated by information-powerful countries’ one-way suppression of information-weak countries, but this situation is not unbreakable. Information warfare is highly asymmetric, and information-weak countries can counter it step by step through technological innovation, flexible strategies and transnational cooperation. By exploiting “asymmetric advantages”, weak countries can not only influence global public opinion but also amplify their voice through joint action and information sharing. Transnational cooperation and regional alliances give weak countries a powerful tool for countering the strong, allowing them to act in concert in international public opinion and challenge the dominance of the information powers. Within a “war framework”, countries can adjust their strategies flexibly and proactively shape patterns of information dissemination rather than passively accepting manipulation by powerful countries.

The sociology of war emphasizes the role of social structure, cultural identity and group behavior in warfare. Weaponized communication is not merely a continuation of military or political action; it also profoundly affects social psychology, group emotions and cultural identity. Powerful countries use information dissemination to shape other countries’ perceptions and attitudes in pursuit of their own strategic goals. From a sociological perspective, however, weaponized communication is not one-way suppression but the product of complex social interaction and cultural response. In this process, information-weak countries are far from helpless: through cultural communication, social mobilization and the dynamic contest of global public opinion, they can counter external manipulation with “soft power”, shape new collective identities and demonstrate the legitimacy of the “weapons of the weak”.

(Funding: This article is a research output of the major project of the National Social Science Fund of China for studying and interpreting the spirit of the Third Plenary Session of the 20th Central Committee of the Communist Party of China, “Research on Promoting the Integrated Management of News Publicity and Online Public Opinion” (Project No. 24ZDA084).)


Authors: Guo Xiaoan (郭小安), Kang Rushi (康如诗)

Published: 2025-05-06

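【Abstract】 In the international public opinion war, weaponized communication has penetrated the military, economic, diplomatic and other fields, bringing with it the imagination and practice of “everything can be weaponized”. Weaponized communication manipulates public perception through technology, platforms and policies, reflecting a complex interplay of power distribution and cultural contestation. Driven by globalization and digitalization, cognitive manipulation, social fragmentation, emotional polarization, digital surveillance and information colonization have become new means of affecting national stability. This not only intensifies competition between information-powerful and information-weak countries, but also gives information-weak countries an opportunity to turn the tables through flexible strategies and technological innovation. In a global landscape of asymmetric communication, finding points of convergence and balance between technological innovation and ethical responsibility, and between strategic goals and social balance, will be key to shaping the future international public opinion landscape.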

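【Keywords】 Public opinion warfare; weaponized communication; information manipulation; asymmetric communication; information security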

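If “propaganda is a rational recognition of the modern world”[1], then weaponized communication is a rational application of modern technological means. In public opinion warfare, each participant pursues strategic goals through different means of communication, keeping them outwardly reasonable and concealed. Unlike traditional military conflict, modern warfare involves not only physical confrontation but also competition across information, economics, psychology and technology. With technological progress and globalization, the form of war has changed profoundly, and traditional physical confrontation has gradually shifted toward multi-dimensional, multi-domain integrated operations. In this process, weaponized communication, as a modern form of warfare, has become an invisible means of violence that influences the psychology, emotions and behavior of adversaries or target audiences by controlling, guiding and manipulating public opinion, thereby achieving political, military or strategic ends. “On War” holds that war is an act of violence intended to render the enemy powerless to resist and compel him to submit to our will.[2] In modern warfare, achieving this goal depends not only on military confrontation but also on support from non-traditional domains such as information, networks and psychological warfare. Sixth Generation Warfare heralds a further shift in the form of war, emphasizing the application of emerging technologies such as artificial intelligence, big data and unmanned systems, as well as all-round contests in the information, network, psychological and cognitive domains. The “front line” of modern warfare has expanded to social media, economic sanctions and cyberattacks, requiring participants to possess stronger capabilities for information control and public opinion guidance.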

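Today, weaponized communication has penetrated the military, economic, diplomatic and other fields, raising concerns that “everything can be weaponized”. In the sociology of war, communication is regarded as an instrument for extending power, and information warfare deeply permeates and accompanies traditional warfare. Within a framework of information control, weaponized communication consolidates or weakens the power of states, regimes or non-state actors by shaping public perception and emotion. This process occurs not only in wartime; it also shapes power relations inside and outside states in non-combat conditions. In international political communication, information manipulation has become a key instrument of great-power rivalry, as states try to influence global public opinion and international decision-making by spreading false information, launching cyberattacks and similar means. Public opinion warfare is not only a means of information dissemination; it also involves power contests between states and the adjustment of diplomatic relations, directly affecting the governance structure and power configuration of the international community. On this basis, this article examines the conceptual evolution of weaponized communication, analyzes the social mentality behind it, describes the specific technical means involved and the risks they bring, and proposes multi-dimensional response strategies at the national level.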

I From the weaponization of communication to weaponized communication: conceptual evolution and metaphor

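Weapons have always been symbols and tools of war in human history, and war is the most extreme and violent form of conflict in human society. To be “weaponized” therefore means that certain tools are used for confrontation, manipulation or destruction in war, with emphasis on how those tools are used. “Weaponize” is defined as “to make it possible to use something to attack a person or group”. In 1957, “weaponize” was introduced as a military term when Wernher von Braun, leader of the V-2 ballistic missile team, said that his main job was to “weaponize” the military’s ballistic missile technology.[3]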

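“Weaponization” first appeared in the space domain during the U.S.-Soviet arms race, when the two superpowers competed for dominance of outer space. “Space weaponization” refers to the process of using space to develop, deploy or use military weapon systems, including satellites, anti-satellite weapons and missile defense systems, for strategic, tactical or defensive operations. Between 1959 and 1962, the United States and the Soviet Union put forward a series of initiatives to prohibit the military use of outer space, in particular the deployment of weapons of mass destruction in orbit. In 2018, then U.S. President Trump signed Space Policy Directive-3 and launched the creation of a “Space Force”, treating space as an operational domain as important as land, air and sea. In 2019, the Joint Statement of the People’s Republic of China and the Russian Federation on Strengthening Contemporary Global Strategic Stability called for “prohibiting the placement of any type of weapons in outer space”.[4]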

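Beyond space, weaponization trends have also appeared in the military, economic and diplomatic domains. “Military weaponization” means applying resources (such as drones or nuclear weapons) to military purposes, deploying weapon systems or developing military capabilities. During the 2022 Russia-Ukraine war, a report by the U.K.’s Royal United Services Institute indicated that Ukraine was losing about 10,000 drones a month to Russian jamming stations.[5] “Weaponization” also frequently appears in expressions such as “financial war” and “diplomatic battlefield”. In the economic domain, weaponization usually refers to a state or organization exploiting shared resources or mechanisms of the global financial system; diplomatic weaponization takes the form of states pursuing their interests and pressuring others through economic sanctions, diplomatic isolation, manipulation of public opinion and similar means. Over time the concept of “weaponization” has extended to politics, society and culture, and especially to information: since the 2016 U.S. presidential election, manipulation of public opinion has become a common instrument of political struggle. Former CIA Director David Petraeus said at a conference of the Institute for National Strategic Studies that the era of “the weaponization of everything” has arrived.[6]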

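As a metaphor, "weaponization" refers not only to the use of actual physical tools but also to a turn toward adversarial and aggressive behavior. It highlights how the concept of the "weapon" permeates everyday life, cultural production and political strategy, and shows how social actors use a variety of tools to achieve strategic ends. Today, many fields that ought to remain neutral, such as the media, the law and government agencies, are often described as "weaponized." The label criticizes their excessive politicization and misuse and underscores their illegitimacy and harmful social effects. Through this metaphor, people unconsciously contrast the current political environment with an idealized and seemingly gentler past. They come to believe that politics used to be more rational and civil, while today's politics appears excessively extreme and polarized [7]. The essence of "weaponization" is therefore a process of political mediatization: political forces use various means and channels to influence or control fields that should remain neutral, turning them into instruments of political goals and political struggle.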

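In the information field, the weaponization of communication has long been a strategic means. During the First and Second World Wars, states made extensive use of propaganda and opinion warfare, deploying communication as a psychological tactic. Weaponized communication is the form that the weaponization of communication takes in the modern information society. It uses algorithms and big-data analytics to control precisely the speed and reach of information flows, and thereby to manipulate opinion and emotion. This combination of technologies, platforms and strategies enables political forces to manipulate public perception and the opinion environment more precisely and efficiently. As information, the substance of public opinion, is "weaponized" and used to influence social cognition and group behavior, the concept of "war" changes accordingly. War is no longer only traditional military confrontation; it also includes psychological and cognitive warfare waged through information dissemination and opinion manipulation. This shift has produced a series of new terms, such as unrestricted warfare, new generation warfare, asymmetric warfare and irregular warfare. Nearly all of these terms borrow the word "warfare" to emphasize the diversity of conflict in the information domain, where information is the core content being "weaponized."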

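Some argue that "war" does not apply where hostilities have not been formally declared [8]. Weaponized communication nonetheless extends the concept of "war": it weakens war's traditional political attributes and treats overt or covert forces and forms in all fields broadly as acts of communication. Notably, English expresses "weaponization" in two ways. "Weaponized + noun" means that something has already been weaponized and has the function or use of a weapon. "Weaponization of + noun" refers to the process of turning something into a weapon or giving it the character of one. In academic usage, weaponized communication and weaponization of communication are not yet strictly distinguished, but their Chinese translations differ. "Weaponized communication" (武器化傳播) emphasizes that the means of communication, or information itself, has been "weaponized" to achieve a strategic goal. "The weaponization of communication" (傳播武器化) emphasizes the process by which communication itself is turned into a weapon. When discussing specific technical means, most academic papers use "weaponized" or "weaponizing" as a modifier for the specific means of communication.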

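This article focuses on concrete communication strategies in international public opinion warfare and describes weaponization phenomena that have already occurred; it therefore uses "weaponized communication" throughout. Weaponized communication is a strategic mode of communication that uses communication means, technical tools and information platforms to precisely manipulate information flows, public perception and emotional responses. Its aim is to achieve specific military, political or social goals. Nor is weaponized communication confined to war or wartime. It is an ongoing communication phenomenon that reflects interaction and contestation among actors, and a flow within a shared space of information and meaning.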

II. Application Scenarios and Implementation Strategies of Weaponized Communication

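In the late 1990s, weaponization in the information domain was still a "dead topic," and states chiefly competed to upgrade physical weapons such as missiles and drones. In the 21st century, by contrast, cyberwarfare burst into public view and became deeply embedded in everyday life. Through social media and smart devices, the public is inevitably drawn into opinion warfare and unwittingly becomes participants or nodes of dissemination. As technology has spread, weaponization has gradually expanded from a state-led instrument of war into social and political spheres. Control over individuals and society has shifted from the visible state apparatus to the more covert manipulation of ideas. The exposure of the PRISM program triggered strong global concern over privacy breaches. It also highlighted the potential for states to use advanced technology for surveillance and control, which is seen as a new form of weaponization. Since Trump's election as U.S. President in 2016, the large-scale use of information weapons such as social bots has become common in global political contests. Information operations are widely used to manipulate information flows and shape the opinion landscape; they include electronic warfare, computer network operations, psychological operations and military deception. These means operate not only in military conflicts and elections. They have gradually permeated cultural conflicts, social movements and transnational rivalries, carrying forward the logic of traditional information operations. Today, weaponized communication, as a sociopolitical instrument, profoundly affects the opinion ecosystem, international relations and individuals' daily lives.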

(1) Information Manipulation Warfare in the Military Domain

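Information flows can directly affect the course of military conflict. They shape the perceptions and decisions of the public and the armed forces, and thereby influence morale, strategic judgment and social stability. In modern warfare, information is no longer merely an auxiliary tool; the information domain has become a core battlefield. Manipulating the direction of information flows can mislead the enemy's assessment of the situation, weaken its will to fight and shake public trust and support. This in turn affects both the decision-making process and the sustainability of the war.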

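The Gulf War is regarded as the beginning of modern information warfare. In this war, the United States conducted a systematic campaign against Iraq using high-tech means, including electronic warfare, air strikes and information operations. The U.S. military used satellites and AWACS early-warning aircraft to monitor the battlefield in real time. Through airdropped leaflets and radio broadcasts, it told Iraqi soldiers of U.S. superiority and of the favorable treatment they would receive upon surrender, inducing Iraqi troops to surrender at the psychological level. The war established the central role of information control in military conflict and demonstrated the potential of information warfare in modern war.

In the 21st century, cyberwarfare has become an important component of information warfare. Cyberwarfare involves not only the dissemination and manipulation of information but also control over an adversary's social functions through attacks on critical infrastructure. The large-scale DDoS (Distributed Denial of Service) attacks on Estonia in 2007 demonstrated the growing fusion of information manipulation and cyberattack. In the 2017 WannaCry ransomware incident, attackers exploited a Windows vulnerability through the EternalBlue exploit. They encrypted files on some 200,000 computers in 150 countries and demanded ransom payments. The attack seriously affected the UK's National Health Service (NHS), disrupting emergency services and paralyzing hospital systems, and further revealed the threat cyberwarfare poses to critical infrastructure.

Moreover, in protracted conflicts, control of infrastructure is widely used to weaken an opponent's strategic capabilities and contest the public information space, because it directly determines the speed, reach and direction of information dissemination. Israel has effectively weakened Palestinian communication capabilities by restricting the use of radio spectrum, controlling internet bandwidth and damaging communication facilities. Israel has also used economic sanctions and legal frameworks to restrict the development of the Palestinian telecommunications market. In this way it suppresses Palestinian competitiveness in information flows and consolidates its own strategic advantage in the conflict [9], maintaining an unequal flow of information.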

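Social media gives information manipulation instant, wide-reaching channels that cross national borders and can influence global public sentiment and political situations. It has also shifted the focus of war from purely physical destruction to opinion manipulation. During the Russia-Ukraine war, deepfake technology was used as a visual weapon that significantly disrupted public perception and wartime opinion. On March 15, 2022, a fabricated video of Ukrainian President Volodymyr Zelensky circulated on Twitter in which he "called on" Ukrainian soldiers to lay down their arms, causing brief confusion. Similarly, fabricated videos of Russian President Vladimir Putin were used to muddy the waters. The platform quickly attached a "Stay informed" notice to these videos, yet in the short term they still noticeably disrupted public emotion and perception. These incidents highlight the key role of social media in modern information warfare: state and non-state actors can interfere in military conflicts through disinformation, emotional manipulation and other means.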

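The complexity of information manipulation warfare also lies in its dual nature: it is both an offensive tool and a defensive means. In the military field, states defend against and counter cyberattacks to safeguard national security, protect critical infrastructure and preserve military secrets. In some cases they also seek to affect an opponent's combat effectiveness and decision-making. In 2015 and 2017, Russian hackers launched large-scale cyberattacks against Ukraine, such as BlackEnergy and NotPetya. By rapidly upgrading its cyber defenses, Ukraine repelled some of the attacks and took countermeasures, averting wider infrastructure paralysis. In addition, units such as the NATO Strategic Communications Centre of Excellence and the British Army's 77th Brigade focus on shaping opinion in peacetime [10]. They use strategic communication, psychological operations and social media monitoring to extend strategic control in the information domain and strengthen their defensive and opinion-shaping capabilities. This further raises the strategic profile of information warfare.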

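Today, information manipulation warfare has become a key element of modern military conflict. By closely combining information technology with psychological manipulation, it has changed the rules of traditional warfare and profoundly affects public perception and the global security landscape. States, multinational corporations and other actors gain strategic advantage in the global information ecosystem by controlling critical infrastructure and social media platforms, restricting information flows and manipulating channels of dissemination.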

(2) Opinion Interference Warfare in Elections

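Elections are the most direct arena of power competition in democratic politics, and information dissemination strongly influences voters' decisions in this process. Through computational propaganda and similar means, external forces or political groups can manipulate voters' emotions and mislead public perception. In doing so they can sway election outcomes, undermine political stability or weaken democratic processes. Elections have thus become the setting in which weaponized communication is most effective.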

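In recent years, elections around the world have shown a trend toward polarization, with vast ideological gaps between groups holding different political positions. Polarization leads people to selectively accept information consistent with their own views while rejecting other information. This "echo chamber effect" deepens one-sided perceptions of political positions and opens more room for opinion interference. Communication technologies, and above all the rise of computational propaganda, enable external forces to manipulate opinion and influence voters' decisions with greater precision. Computational propaganda refers to the use of computational techniques, algorithms and automated systems to manipulate information flows in order to spread political messages, interfere in election outcomes and influence opinion. Its core features are algorithm-driven precision and automated scale. By overcoming the limits of traditional manual dissemination, it markedly amplifies the effects of opinion manipulation.

In the 2016 U.S. presidential election, the Trump campaign used Cambridge Analytica to analyze Facebook user data and push customized political advertisements to targeted voters in order to sway their voting intentions [11]. The episode is regarded as a textbook case of computational propaganda interfering in an election. It also gave other politicians a template, spurring the spread of computational propaganda worldwide. In the 2017 French presidential election, candidate Emmanuel Macron's campaign was hacked and its internal emails were stolen and published. At the same time, claims circulated that Macron held a secret offshore account and evaded taxes, in an attempt to smear his image. During the 2018 Brazilian presidential election, candidate Jair Bolsonaro's campaign used WhatsApp groups to push large volumes of inflammatory images, videos and messages to targeted users in order to sway voters' emotions. According to surveys by the Oxford Internet Institute, the number of countries using computational propaganda rose from 28 in 2017 to 70 in 2019, and to 81 in 2020. Through technical means and communication strategies, computational propaganda is thus redefining the rules of opinion in elections worldwide.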

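Computational propaganda is also an important tool for state actors in opinion interference. In 2011, U.S. Central Command (CENTCOM) launched Operation Earnest Voice in the Middle East, creating and managing multiple fake online identities (sockpuppets) to distort conversations on Arabic-language social media. Russia has also frequently used computational propaganda to interfere. In Canada it has operated some 200,000 social media accounts, exploiting far-right and far-left movements to spread pro-Russian narratives. These accounts manufactured false social controversies in an attempt to undermine Canadian support for Ukraine [12].

Social bots are an important component of computational propaganda. They use automation and scale to manufacture opinion momentum, using specific hashtags to boost the exposure of information on social platforms and to manipulate the priority of issues. During the 2016 U.S. election, Russia used social bots to post content supporting Putin and attacking the opposition. Information overload drowned out opposition voices and reinforced a pro-Putin climate of opinion [13]. During the 2017 Gulf crisis, Saudi Arabia and Egypt used Twitter bots to inflate the anti-Qatar hashtag #AlJazeeraInsultsKingSalman until it trended. This fabricated a peak of anti-Qatar sentiment and influenced attitudes toward Qatar worldwide [14].

Deepfake technology further enhances the precision and concealment of computational propaganda. In 2024, a fabricated video of U.S. President Joe Biden spread rapidly on X (formerly Twitter), showing him using offensive language in the Oval Office; it provoked controversy and affected voters' emotions. According to a survey by the cybersecurity company McAfee, 63% of respondents had seen a political deepfake in the previous two months, and nearly half said such content had influenced their voting decisions [15].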
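The hashtag-amplification mechanism described above can be illustrated with a minimal Python sketch. For illustration only, it assumes a trending list ranked by raw post volume. The function name `trend_scores` and the data are hypothetical and do not describe any platform's actual trending algorithm. The sketch contrasts that ranking with one based on the number of distinct accounts, showing how a handful of automated accounts can outrank a broader but quieter conversation.

```python
from collections import defaultdict


def trend_scores(posts):
    """Rank hashtags two ways (hypothetical illustration).

    posts: iterable of (account_id, hashtag) pairs.
    Returns (raw post counts, distinct-account counts) per hashtag.
    """
    raw = defaultdict(int)
    accounts = defaultdict(set)
    for account, tag in posts:
        raw[tag] += 1              # every post counts, including repeats
        accounts[tag].add(account)  # each account counts once
    distinct = {tag: len(accs) for tag, accs in accounts.items()}
    return dict(raw), distinct


# Hypothetical data: 5 automated accounts post #TagA 20 times each,
# while 40 ordinary users post #TagB once each.
posts = [(f"bot{i}", "#TagA") for i in range(5) for _ in range(20)]
posts += [(f"user{i}", "#TagB") for i in range(40)]

raw, distinct = trend_scores(posts)
print(max(raw, key=raw.get))            # #TagA (100 posts vs 40)
print(max(distinct, key=distinct.get))  # #TagB (40 accounts vs 5)
```

Counting distinct accounts blunts this particular tactic, but not a network that simply operates more accounts.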

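Worldwide, computational propaganda has permeated the opinion wars of many countries, affecting social stability and national security. The Israel Defense Forces have used digital weapons to wage opinion warfare against the Palestinians. Turkey has cultivated a "patriotic troll army" to manipulate domestic and international opinion, and the Mexican government has used botnets to influence public opinion. As a key means of contemporary opinion interference, computational propaganda is changing the landscape of global political communication. As artificial intelligence, quantum computing and other technologies develop, computational propaganda may interfere in electoral processes in even more covert and efficient ways. It may even directly threaten the core operating logic of democratic institutions.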

(3) Symbolic Identity Warfare in the Cultural Domain

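Weaponized communication seeks to influence people's thoughts, emotions and behavior by manipulating information, symbols and values, and thereby to shape or change a society's collective cognition and cultural identity. Such communication goes beyond transmitting information. Through particular narrative frames, cultural symbols and emotional resonance, it promotes the spread and acceptance of a specific ideology or political idea. By manipulating cultural symbols, social emotions and collective memory, weaponized communication disrupts social structures and cultural identities, becoming the core means of symbolic identity warfare.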

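Memes are cultural symbols that combine visual elements with concise text. They provoke emotional reactions through humor, satire or provocation and influence people's political attitudes and behavior. Pepe the Frog began as a harmless cartoon character. Far-right groups repurposed and weaponized it to spread hate speech, and it gradually became a symbol of racism and anti-immigrant sentiment. Memes translate complex political emotions into easily shared visual symbols that quickly arouse public distrust of and anger at policies; they have been described as "iconoclastic weaponization." This process manipulates cultural symbols to serve political or social struggles [16] and deepens social and political divisions. During the Brexit campaign, for example, memes bearing the slogan "Take Back Control" spread rapidly and reinforced nationalist sentiment.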

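Beyond the creation of cultural symbols, the filtering and blocking of symbols can likewise shape or entrench particular cultural identities or political positions. Censorship has been an important means for power to control information since ancient times. As early as ancient Greece and Rome, governments censored public speeches and literary works to maintain social order and stabilize power. In the digital age, the rise of the internet and social media has modernized censorship. Platform moderation has gradually replaced traditional forms of censorship as a core tool of contemporary information control and opinion guidance. Algorithmic moderation uses artificial intelligence to detect sensitive topics, keywords and patterns in user behavior data, and automatically deletes or blocks content deemed to be "in violation." Social media moderation teams also manually screen user-generated content to ensure compliance with platform policies and the law. Platform moderation does more than restrict the spread of certain content: through promotion, deletion and blocking, it guides opinion and shapes the public's cognitive frames.

Mainstream social platforms control the flow of information through strict content moderation. By contrast, fringe platforms such as Gab, Gettr and BitChute lack effective moderation and have become breeding grounds for extremist speech and malicious information. Because these platforms impose few restrictions on what can be posted, extremist views and disinformation spread freely. Gab, for example, has been repeatedly criticized for extremist content and accused of fueling violence and hatred. In these echo chambers, users encounter only information consistent with their own views. Such an environment further reinforces extremist thinking and sharpens antagonism between social groups [17].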

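As the carrier and instrument of communication, language can profoundly influence group behavior and cultural identity through emotional manipulation, symbolic politics and social mobilization. The weaponization of language concerns how linguistic forms and cultural contexts shape the way information is received. It emphasizes how language is used to manipulate, steer or change people's perceptions and behavior. This involves not only the use of particular vocabulary and rhetorical devices but also the construction of specific social meanings and cultural frames through linguistic expression. As another important tool of symbolic identity warfare, language builds an "us versus them" narrative frame. The Great Translation Movement selectively translated nationalist remarks by Chinese netizens and spread them on international social media platforms, fostering negative perceptions of China. This linguistic manipulation amplified controversial content through emotionally charged expression and deepened cultural biases in the international community.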

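The deeper logic of weaponized language lies in emotional and inflammatory linguistic forms. Western countries often justify interventions under righteous-sounding labels such as "human rights" and "democracy," legitimizing political or military action. White supremacists use vague labels such as "alt-right" to repackage their ideology, recasting "white supremacy," a term with strongly negative connotations, as a relatively neutral concept. This lowers social resistance to the term and uses a broad "umbrella" identity to widen their base of support. By infiltrating everyday discourse, hate politics and extremist speech are legitimized and gradually become a political norm. Once the public has come to treat such politics as ordinary, language has become truly weaponized [18]. In Nigeria, hate-inciting content spreads through ethnic, religious and regional topics, severely worsening social relations [19]. Linguistic ambiguity and plausible deniability also help communicators evade responsibility while conveying complex social and political issues through simplified narratives. Trump's "America First" policy used negative labels and emotionally charged rhetoric in opposing globalization, questioning climate science and attacking traditional allies. It deliberately took positions contrary to mainstream opinion in order to stoke public distrust of globalization and reshape a cultural identity centered on putting national interests first [20].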

III. Risks and Challenges of Weaponized Communication: Legitimacy and Destructiveness

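Weaponized communication poses great risks to the international opinion landscape, yet in certain circumstances some states or groups may grant it a degree of legitimacy through legal, political or moral frameworks. After the September 11 attacks, for example, the United States used the Patriot Act to expand the surveillance powers of its intelligence agencies and imposed wide-ranging information controls in the name of "counterterrorism." This "legitimacy" has often been criticized for undermining civil liberties and eroding the core values of democratic society.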

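In international political contests, weaponized communication is more often regarded as a "gray zone" instrument. Confrontation between states is no longer limited to economic sanctions or diplomatic pressure; it is also conducted through non-traditional means such as information manipulation and social media interference. Some states spread disinformation on the pretext of "protecting national interests" and argue that their conduct is lawful. Although such conduct may be contested under international law, it is often rationalized as a necessary means of "countering external threats." In some countries that lack a strict legal framework for information regulation, election interference is often tolerated and even treated as a "legitimate" political activity.

At the cultural level, some states try to build global cultural influence by spreading particular cultural symbols and ideologies. Western countries often promote their values in the name of "cultural sharing" and "spreading civilization." In practice, however, they manipulate cultural symbols and narrative frames to weaken other cultures' sense of identity, producing imbalances in the global cultural ecology. Legal frameworks also lend some support to the legitimacy of weaponized communication. Some states restrict the spread of so-called "harmful information" through information censorship and content filtering in the name of "counterterrorism" and "countering extremism." This legitimacy, however, often crosses ethical boundaries, resulting in information blockades and the suppression of speech. Information governance justified by "national security" enjoys a degree of domestic acceptance, but it has opened space for weaponized communication to proliferate.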

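Compared with its claims to legitimacy, the destructiveness of weaponized communication is especially pronounced. Weaponized communication has become an important tool for power structures to manipulate opinion. It not only distorts information content but also profoundly affects public perception, social sentiment and international relations through privacy violations, emotional mobilization and cultural infiltration.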

(1) Information Distortion and Cognitive Manipulation

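Information distortion occurs when information is deliberately or inadvertently altered during dissemination, so that what the public receives differs significantly from the original. Disinformation and misleading content are increasingly rampant on social media. Content generated by artificial intelligence models such as GPT may aggravate the problem because of biases in their training data: gender, racial or social biases can be reflected in automatically generated text, amplifying the risk of distortion. The speed of social media also means that traditional fact-checking mechanisms struggle to keep pace with disinformation. False information often dominates public discussion within a short time, and cross-platform dissemination and anonymity make clarification and correction more complicated. This asymmetry in dissemination erodes the authority of traditional news organizations. The public tends to trust instantly updated information on social platforms over in-depth reporting by traditional news outlets, which further weakens the role of news organizations in resisting disinformation.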

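Beyond distorting information itself, weaponized communication exploits the psychological mechanism of cognitive dissonance. Cognitive dissonance is the psychological discomfort an individual experiences on encountering information that conflicts with existing beliefs or attitudes. By creating cognitive dissonance, communicators unsettle target audiences' existing attitudes and may even induce them to adopt new ideologies. In elections, targeted negative information often forces voters to reconsider their political positions and may even change how they vote. Through selective exposure, weaponized communication further accelerates the formation of "information cocoons," in which audiences gravitate toward information consistent with their beliefs and ignore or reject opposing views. This reinforces individual cognitive biases and lets disinformation spread quickly within groups, where outside facts and rational voices struggle to break through. The end result is a highly homogeneous opinion ecosystem.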
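The link between selective exposure and a fragmented or homogeneous opinion ecosystem is often studied with bounded-confidence models from opinion dynamics. The Python sketch below implements one of them, the Deffuant-Weisbuch model. The model is not used in the original text and is offered here only as an illustration. Two agents adjust their opinions toward each other only if they already differ by less than a tolerance threshold `epsilon`, a simple stand-in for selective exposure. All parameter values are illustrative assumptions.

```python
import random


def deffuant(n=200, epsilon=0.2, mu=0.5, steps=50_000, seed=1):
    """Bounded-confidence (Deffuant-Weisbuch) opinion dynamics on [0, 1].

    Two randomly chosen agents move toward each other only if their
    opinions differ by less than epsilon (the tolerance threshold).
    """
    rng = random.Random(seed)
    opinions = [rng.random() for _ in range(n)]
    for _ in range(steps):
        i, j = rng.sample(range(n), 2)
        diff = opinions[j] - opinions[i]
        if abs(diff) < epsilon:  # views that are too far apart are ignored
            opinions[i] += mu * diff
            opinions[j] -= mu * diff
    return opinions


def count_clusters(opinions, gap=0.05):
    """Count groups of opinions separated by more than `gap`."""
    values = sorted(opinions)
    return 1 + sum(1 for a, b in zip(values, values[1:]) if b - a > gap)


narrow = count_clusters(deffuant(epsilon=0.1))  # low tolerance for dissonant views
broad = count_clusters(deffuant(epsilon=0.5))   # high tolerance
print(narrow, broad)
```

With a low tolerance the population typically settles into several internally uniform camps that no longer influence one another; with a high tolerance it converges on a single view. The model is deliberately stylized and says nothing about the strength of these effects in real audiences.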

(2) Privacy Leakage and Digital Surveillance

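In recent years, the abuse of deepfake technology has aggravated privacy violations. In 2019, the face-swapping app "ZAO" was taken down because its terms assumed by default that users consented to the use of their likenesses, exposing the risk of excessive collection of biometric data. Once processed by deep learning, photos uploaded by users can be used to generate convincing face-swap videos and can also become a source of privacy leaks. More seriously, deepfake and similar technologies have been abused for gender-based violence. The faces of a number of European and American actresses have been illegally inserted into fake sexual videos that circulated widely. Platforms remove such content in some cases, but the spread of open-source tools allows malicious users to copy and share forged content with ease.

In addition, social media users often grant platforms default access to their devices' photos, camera, microphone and other functions. Through these permissions, platforms collect large amounts of personal data and can use algorithms to analyze users' behavioral traits, interests and social relationships. They can then deliver targeted advertising, content recommendations and even information manipulation. Such large-scale data collection has spurred a global debate on privacy protection. In Europe, the General Data Protection Regulation (GDPR) seeks to strengthen the protection of personal privacy through strict rules on data collection and use. However, platforms often circumvent these rules through "implied consent" or convoluted user agreements. Data processing therefore remains opaque, and ordinary users cannot tell how their data are actually used. Section 230 of the U.S. Communications Decency Act shields online platforms from legal liability for user-generated content. This provision has fostered the development of platform content moderation but has also left platforms with little incentive to address privacy violations. Out of commercial considerations, platforms are often slow to handle disinformation and privacy problems, so responsibility for moderation is repeatedly deferred.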

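In digital surveillance, cooperation between social platforms and governments has turned user data into a core resource of "surveillance capitalism." The U.S. National Security Agency (NSA) has conducted mass surveillance through phone records, internet communications and social media data. It has also cooperated with large companies such as Google and Facebook to obtain users' online behavioral data for global intelligence gathering and behavioral analysis. The misuse of transnational surveillance technology has pushed privacy violations onto the international stage. The Pegasus spyware developed by the Israeli cybersecurity company NSO Group infects target devices through "zero-click" attacks and can steal private information and communications in real time. In the case of the 2018 murder of Saudi journalist Jamal Khashoggi, the Saudi government reportedly used Pegasus to monitor the communications of people close to him. The case reveals the far-reaching threat this technology poses to individual privacy and international politics.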

(3) Emotional Polarization and Social Fragmentation

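Emotions play a key role in shaping individual cognition and decision-making. Weaponized communication stirs fear, anger, sympathy and other emotions to cloud rational judgment, pushing the public toward irrational, emotion-driven reactions. War, violence and nationalism are common themes of emotional mobilization. Communicators embed patriotism, religious belief and similar elements in carefully designed issues to trigger rapid emotional resonance. The widespread use of digital technology, especially the combination of artificial intelligence and social media platforms, further magnifies the risk of emotional polarization. The rapid spread of disinformation and extremist speech on platforms stems not only from ordinary users' sharing but also from algorithmic amplification. Platforms tend to prioritize emotionally charged, highly interactive content, which often contains inflammatory language and extreme views. This ranking accelerates the spread of hate speech and radical opinions.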
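To show how engagement-based ranking can favor emotionally charged material, here is a minimal Python sketch of a feed ordered by a weighted engagement score. The weights, post names and engagement counts are hypothetical. The assumption that the provocative post attracts more shares and comments is built into the example data. Real platform ranking systems are far more complex and are not public in this form.

```python
from dataclasses import dataclass


@dataclass
class Post:
    post_id: str
    likes: int
    shares: int
    comments: int


def engagement_score(p: Post, w_like=1.0, w_share=3.0, w_comment=2.0) -> float:
    """Hypothetical weighted engagement score: shares and comments count more than likes."""
    return w_like * p.likes + w_share * p.shares + w_comment * p.comments


def rank_feed(posts):
    """Order posts by engagement score, highest first."""
    return sorted(posts, key=engagement_score, reverse=True)


feed = [
    Post("calm_explainer", likes=120, shares=5, comments=10),  # score 155
    Post("outrage_clip", likes=90, shares=40, comments=60),    # score 330
    Post("fact_check", likes=60, shares=8, comments=4),        # score 92
]
print([p.post_id for p in rank_feed(feed)])
# ['outrage_clip', 'calm_explainer', 'fact_check']
```

Nothing in the scoring function refers to emotion. The outcome follows entirely from which posts generate interaction, which is why engagement optimization can amplify inflammatory content without being designed to.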

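Social media hashtags and algorithmic recommendations play a key role in emotional polarization. After the Charlie Hebdo attack, the hashtag #StopIslam became a vehicle for hate speech, with users posting hostile and violent messages under it. During the 2020 U.S. presidential election, extreme political rhetoric and misinformation on social platforms were likewise amplified amid fierce partisan conflict. Through precise emotional manipulation, weaponized communication tears apart public dialogue and greatly affects democratic processes.

Another distinctive extremist mobilization strategy is "weaponized autism," in which far-right groups exploit the technical expertise of autistic individuals. These groups recruit technically capable individuals who face social difficulties. By offering them a false sense of belonging, the groups turn them into executors of information warfare. Under the direction of extremist organizations, these individuals are used to spread hate speech, carry out cyberattacks and advance extremism. The phenomenon reveals the deeper mechanisms of emotional manipulation and shows how extremist groups harness technology to serve broader political and social agendas [21].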

(4) Information Colonization and Cultural Infiltration

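Weaponized interdependence theory explains how states exploit key nodes in political, economic and information networks to exert pressure on other states [22]. In the information domain in particular, developed countries practice "information colonization" by controlling information flows, further consolidating their cultural and political advantages. Digital platforms are the vehicle of this colonization. Countries of the Global South depend heavily on Western-dominated technology platforms and social networks for information, and in sub-Saharan Africa Facebook has become synonymous with "the internet." This dependence brings Western companies enormous advertising revenue. Through algorithmic recommendation, it also exerts a far-reaching influence on local African cultures and values, especially regarding gender, family and religious belief, making cultural infiltration the norm.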
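The idea of exploiting key nodes can be made concrete by treating an information network as a graph. The following Python sketch assumes, as a simplification, an undirected network with hypothetical node names. It scores each node by how many pairs of other nodes would be cut off from each other if that node were removed. This "chokepoint score" is an illustrative measure chosen here, not one defined by weaponized interdependence theory itself.

```python
from itertools import combinations


def reachable(graph, start, removed):
    """Nodes reachable from `start` when node `removed` is taken out (depth-first search)."""
    seen = {start}
    stack = [start]
    while stack:
        node = stack.pop()
        for nxt in graph[node]:
            if nxt != removed and nxt not in seen:
                seen.add(nxt)
                stack.append(nxt)
    return seen


def chokepoint_scores(graph):
    """For each node, count the pairs of other nodes that lose all connection without it."""
    scores = {}
    for hub in graph:
        others = [n for n in graph if n != hub]
        scores[hub] = sum(
            1 for a, b in combinations(others, 2)
            if b not in reachable(graph, a, removed=hub)
        )
    return scores


# Hypothetical network: four countries A to D linked mainly through one platform;
# only C and D have a direct link of their own.
network = {
    "Platform": ["A", "B", "C", "D"],
    "A": ["Platform"],
    "B": ["Platform"],
    "C": ["Platform", "D"],
    "D": ["Platform", "C"],
}
print(chokepoint_scores(network))
# {'Platform': 5, 'A': 0, 'B': 0, 'C': 0, 'D': 0}
```

Only the platform node holds real leverage here. The single direct link between C and D is the sole connection that survives its removal, which mirrors the dependence on a dominant platform described above.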

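Digital inequality is another manifestation of information colonization. Developed countries' dominance of digital technology and information resources has increasingly marginalized the Global South in the economic, educational and cultural spheres. Because of inadequate infrastructure and technological blockades, Palestine struggles to integrate into the global digital economy. This constrains local economic development and further weakens Palestine's voice in global information flows. Major economies and information powers restrict other countries' access to key technologies and innovation resources through technology embargoes and economic sanctions. Such measures hinder the technological development of the target countries and deepen fractures in the global technology and innovation ecosystem. Since the United States withdrew from the Iran nuclear deal (JCPOA) in 2018, U.S. economic sanctions have hampered Iran's development in semiconductors and 5G. Such asymmetries in technology and innovation widen the gaps in the global technology ecosystem and leave many countries at a disadvantage in information competition.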

IV. Reflection and Discussion: The Contest for Discursive Power in an Asymmetric Communication Landscape

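In the competitive landscape of international asymmetric communication, the stronger side often dominates opinion through mainstream media, international news agencies and similar channels. The weaker side must rely on innovative communication technologies and methods to offset its disadvantages and compete for discursive power. At the core of this landscape lies information geopolitics: power rivalry between states depends not only on geography, military strength or economic resources, but also on control over information, data and technology. Great-power competition is no longer confined to control of physical space but extends to the contest over opinion space. These "information spectacles" involve discursive power, information circulation and media influence within the global communication ecosystem. In this process, states continually produce spectacles to influence international opinion, shape global cognitive frames and thereby achieve their strategic goals. Strategies of asymmetric communication concern more than the delivery of content. Above all, they concern how to use communication technologies, platforms and means to close gaps in resources and capabilities; the core of communication is no longer the content itself but the contest for discursive power. With the rise of information and cognitive warfare, whoever controls information gains the upper hand in global competition.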

(1) Technological Catch-up through Late-Mover Advantage

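Traditional great powers and dominant communicators control global opinion, whereas weaker countries often lack the channels to compete with them. The theory of late-mover advantage holds that late-developing countries can leapfrog by bypassing established technological paths and importing existing advanced technology and knowledge. They can thus rise quickly while avoiding the inefficient and outdated stages of early innovation. In the context of weaponized communication, this theory offers information-weak countries a path to break through the communication barriers of major powers with emerging technologies and to catch up technologically.

Traditional media are often constrained by resources, reach and censorship. They disseminate information slowly and to limited audiences, and are vulnerable to manipulation by particular states or groups. The rise of digital media has fundamentally changed the communication landscape. Weaker countries can use globalized internet platforms to address international audiences directly, without relying on traditional news agencies and mainstream media. Emerging technologies allow them to deliver information more precisely and to expand their influence in international opinion rapidly through targeted communication and emotional engagement. Late-developing countries can use advanced technologies such as big data, artificial intelligence and 5G networks to communicate with precision and build efficient channels. With big-data analytics, for example, they can understand audience needs and opinion trends in depth, quickly read the pulse of global opinion and carry out targeted communication. Artificial intelligence can forecast the direction of opinion and also optimize communication strategies in real time. The spread of 5G networks greatly increases the speed and coverage of dissemination. This allows late-developing countries to overcome the limits of traditional communication models at low cost and high efficiency and to build distinctive communication advantages.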

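Through transnational cooperation, late-developing countries can pool more communication resources and extend the breadth and depth of their communication. For example, Argentina and other Latin American countries jointly established a "Latin American News Network" that shares news content. The network helps Latin American countries speak with a unified voice in international opinion and counter the one-sided narratives of Western media. In Africa, South Africa has worked with Huawei on a "Smart South Africa" project to build modern information infrastructure, promote digital transformation and make public services more efficient. Governments of late-developing countries should increase investment in research, development and innovation and encourage the growth of domestic companies and talent. They should also attend to cultural export and the development of the media industry, using global cooperation and decentralized communication models to raise their discursive power in the international information space. Governments can fund digital cultural production, support the growth of local social media platforms and pool more communication resources through international cooperation frameworks.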

(2) Building Barriers for Information Countermeasures

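Military action may trigger full-scale conflict, and economic sanctions carry their own risks. Weaponized communication, by contrast, can achieve strategic goals without triggering all-out war, which makes it highly attractive in terms of cost and strategy. Because it is low-cost and high-return, a growing number of state and non-state actors choose to manipulate information to achieve their strategic goals. As the practice spreads, states face more complex and changeable threats from external and internal information attacks. As information warfare intensifies, traditional military defense alone can no longer meet the needs of modern warfare. Building a robust information defense system has instead become a key strategy for maintaining political stability, preserving social identity and enhancing international competitiveness. How to respond effectively to external information interference and opinion manipulation, and how to mount information countermeasures, has therefore become a pressing question for all states.

Sound cybersecurity infrastructure is key to national security because it prevents sensitive information from being manipulated or tampered with by outside actors. The European Union, for example, has used its "Digital Single Market" strategy to push member states to strengthen cybersecurity and to require internet companies to respond more actively to disinformation and foreign interference. The EU's cybersecurity directive also requires member states to establish emergency response mechanisms to protect critical information infrastructure from cyberattacks. The EU has further cooperated with social platform companies such as Facebook, Twitter and Google to combat the spread of fake news through anti-disinformation tools and data analysis techniques. Artificial intelligence, big data and automation are becoming important tools of information defense. They are used to monitor dissemination paths in real time, identify potential disinformation and resist opinion manipulation. In cybersecurity, big-data analytics helps decision-makers identify and give early warning of malicious attacks and refine countermeasure strategies. These technologies strengthen information defense at the domestic level and also increase a state's initiative and competitiveness in the international information space.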
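The real-time monitoring described above often relies on signals of coordinated behavior. As a hedged illustration, the Python sketch below flags messages that several distinct accounts post with identical wording within a short time window. The function name, thresholds and example posts are hypothetical, the text normalization is deliberately crude, and the heuristic is not drawn from any specific EU or platform system.

```python
from collections import defaultdict


def flag_coordinated(posts, min_accounts=3, window_seconds=60):
    """Flag texts posted by at least `min_accounts` distinct accounts within `window_seconds`.

    posts: iterable of (account_id, timestamp_seconds, text).
    Returns the normalized texts that meet the threshold.
    """
    by_text = defaultdict(list)
    for account, ts, text in posts:
        key = " ".join(text.lower().split())  # crude normalization: case and whitespace only
        by_text[key].append((ts, account))

    flagged = []
    for key, items in by_text.items():
        items.sort()  # order by timestamp
        start = 0
        for end in range(len(items)):
            # slide the window start forward until it spans at most window_seconds
            while items[end][0] - items[start][0] > window_seconds:
                start += 1
            accounts = {acc for _, acc in items[start:end + 1]}
            if len(accounts) >= min_accounts:
                flagged.append(key)
                break
    return flagged


posts = [
    ("a1", 0, "Breaking: the bridge has collapsed!"),
    ("a2", 12, "breaking:  the bridge has collapsed!"),
    ("a3", 30, "Breaking: the bridge has collapsed!"),
    ("u1", 0, "Nice weather today"),
    ("u2", 5000, "nice weather today"),
    ("u3", 9000, "Nice weather today"),
]
print(flag_coordinated(posts))
# ['breaking: the bridge has collapsed!']
```

Coordinated posting can also be innocent, for example when many people share the same news headline. Flags of this kind therefore call for human review rather than automatic removal.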

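Countermeasure mechanisms are another important component of an information defense system. Under the pressure of international opinion in particular, monitoring external information flows in real time and promptly correcting disinformation are key to keeping the initiative in public opinion. Since the 2014 Crimea crisis, Ukraine has built a sizable cyber defense system in cooperation with NATO and the United States. Ukraine's national cybersecurity agency set up an "information countermeasures team" to deal with cyber threats. The team uses social media and news release platforms to rebut Russian false reports in real time, a strategy that markedly improved Ukraine's reputation and credibility in international opinion.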

(3) Agenda Setting in Opinion Guidance

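In an informatized and digitized landscape of global competition, opinion guidance concerns not only the content of communication. More crucially, it concerns how to set the agenda and bring focus to the issues that draw global attention. Agenda-setting theory holds that whoever controls the issues in circulation can steer the direction of opinion. Agenda setting shapes public attention to and evaluation of events by controlling the scope and focus of discussion. The rise of social media has given information-weak countries an opening to contest dominance over communication through coordinated activity across multiple platforms. Ukraine, for example, used social media during the Russia-Ukraine war to show the realities of the war. Alongside battlefield footage, it wove in civilians' emotional appeals, using tragic narratives of civilian suffering and urban destruction to arouse international sympathy and attention.

While resisting external information interference, a state also needs to project positive narratives proactively, telling cultural stories that resonate with the international community. Such stories should meet the emotional expectations of international opinion while displaying the country's distinctiveness and strengthening its ties with the international community. Consider the jointly built Belt and Road. In partner countries, China has invested in and built a large number of infrastructure projects. These projects have helped improve local economic foundations and demonstrated China's sense of responsibility in globalization. They have also opened windows for cultural cooperation and exchange, presenting to the world the rich history and culture of the Chinese nation and the inclusiveness and sense of responsibility of Chinese culture.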

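Countries of the Global South, however, often face constraints in resources, technology and access to international communication platforms. They find it difficult to compete directly with developed countries and therefore rely on more flexible and innovative means of communication to take part in setting the global agenda. Brazil, for example, has faced negative pressure from Western media over environmental protection and climate change, particularly deforestation in the Amazon. In response, the Brazilian government has used social media to publish the latest data and success stories on Amazon protection, actively shaping the country's image in environmental protection. At the same time, Brazil has worked with other developing countries in global climate negotiations and promoted South-South cooperation, strengthening its voice on climate issues. Major international events, humanitarian activities and cultural products are also effective ways of telling a nation's story. International sporting events such as the World Cup and the Olympic Games showcase athletic competition and also serve as stages for national image and cultural soft power. By hosting or actively participating in such global events, a country can show the world its strength, values and cultural appeal and advance a positive opinion agenda.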

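"War is nothing but the continuation of politics by other means" [23]. Clausewitz's classic dictum finds a modern interpretation in the context of weaponized communication. Weaponized communication transcends the physical boundaries of traditional warfare and has become a modern strategic instrument that fuses information warfare, cognitive warfare and psychological warfare. It manipulates information flows and public perception without resorting to physical violence, enabling state and non-state actors to achieve political goals without direct military action; it is markedly strategic and goal-oriented. By manipulating information, emotions and values, weaponized communication can achieve strategic ends while avoiding full-scale war. In global competition and conflict, it has become an important means by which strong states exert political pressure on weak ones.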

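The core of weaponized communication lies in weakening an adversary's decision-making and capacity for action through information manipulation. Its complexity, however, makes its effects hard to predict fully. Information-strong countries suppress information-weak ones through technological advantages and communication channels, yet the effects of communication remain highly uncertain. As social media and digital platforms have globalized, the boundaries and effects of information flows have become ever harder to control. This complexity gives weaker countries opportunities to break discursive hegemony and drives a reverse contest in communication. Weaker countries can use these platforms to push back, challenge the information manipulation of strong countries and claim a place in global opinion. This asymmetric contest reflects a dynamic balance in international opinion. Communication is no longer one-way control but a more complex interaction and dialogue that gives the weak a chance to influence opinion.

The current international opinion landscape is still dominated by one-way suppression of information-weak countries by information-strong ones, but this situation is not immutable. Information warfare is highly asymmetric, and information-weak countries can gradually push back through technological innovation, flexible strategies and transnational cooperation. By exploiting "asymmetric advantages," weaker countries can influence global opinion and raise their discursive power through joint action and information sharing. Transnational cooperation and regional alliances give weaker countries powerful tools for countering strong ones. They allow them to form a united front in international opinion and challenge the dominance of information powers. Within this framework of war, states can adjust their strategies flexibly and actively shape the communication landscape rather than passively accepting the information manipulation of strong countries.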

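The sociology of war emphasizes the role of social structure, cultural identity and group behavior in war. Weaponized communication is not merely an extension of military or political action; it profoundly affects social psychology, collective emotions and cultural identity. Strong countries use information dissemination to shape other countries' perceptions and attitudes in pursuit of their own strategic goals. From a sociological perspective, however, weaponized communication is not one-way suppression but the product of complex social interaction and cultural response. In this process, information-weak countries are not entirely at a disadvantage. They can draw on cultural communication, social mobilization and the dynamic contest of global opinion to push back against external manipulation with "soft power." In doing so they can forge new collective identities and demonstrate the legitimacy of the "weapons of the weak."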

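(Funding: This article is a research outcome of "Research on the Integrated Management of News Publicity and Online Public Opinion" (Project No. 24ZDA084). The project is a major special project of the National Social Science Fund for studying and interpreting the spirit of the Third Plenary Session of the 20th CPC Central Committee.)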

References:

[1] Lasswell H D. Propaganda Technique in the World War [M]. Beijing: Renmin University Press, 2003.